100 changed files with 7654 additions and 0 deletions


- `examples/alexk-lcr/ASSEMBLING.md` (+40 -0)
- `examples/alexk-lcr/CONFIGURING.md` (+90 -0)
- `examples/alexk-lcr/INSTALLATION.md` (+82 -0)
- `examples/alexk-lcr/README.md` (+174 -0)
- `examples/alexk-lcr/RECORDING.md` (+80 -0)
- `examples/alexk-lcr/assets/simulation/lift_cube.xml` (+135 -0)
- `examples/alexk-lcr/assets/simulation/pick_place_cube.xml` (+135 -0)
- `examples/alexk-lcr/assets/simulation/push_cube.xml` (+137 -0)
- `examples/alexk-lcr/assets/simulation/reach_cube.xml` (+135 -0)
- `examples/alexk-lcr/assets/simulation/stack_two_cubes.xml` (+141 -0)
- `examples/alexk-lcr/bus.py` (+328 -0)
- `examples/alexk-lcr/configs/.gitkeep` (+0 -0)
- `examples/alexk-lcr/configure.py` (+213 -0)
- `examples/alexk-lcr/graphs/bi_teleop_real.yml` (+74 -0)
- `examples/alexk-lcr/graphs/mono_replay_real.yml` (+40 -0)
- `examples/alexk-lcr/graphs/mono_teleop_real.yml` (+37 -0)
- `examples/alexk-lcr/graphs/mono_teleop_real_and_simu.yml` (+70 -0)
- `examples/alexk-lcr/graphs/mono_teleop_simu.yml` (+43 -0)
- `examples/alexk-lcr/graphs/record_mono_teleop_real.yml` (+119 -0)
- `examples/alexk-lcr/nodes/interpolate_lcr_to_lcr.py` (+148 -0)
- `examples/alexk-lcr/nodes/interpolate_lcr_to_record.py` (+114 -0)
- `examples/alexk-lcr/nodes/interpolate_lcr_to_simu_lcr.py` (+133 -0)
- `examples/alexk-lcr/nodes/interpolate_replay_to_lcr.py` (+83 -0)
- `examples/aloha/ASSEMBLING.md` (+64 -0)
- `examples/aloha/CONFIGURING.md` (+95 -0)
- `examples/aloha/INSTALLATION.md` (+87 -0)
- `examples/aloha/README.md` (+42 -0)
- `examples/aloha/RECORDING.md` (+17 -0)
- `examples/aloha/benchmark/python/README.md` (+13 -0)
- `examples/aloha/benchmark/python/dynamixel.py` (+325 -0)
- `examples/aloha/benchmark/python/robot.py` (+191 -0)
- `examples/aloha/benchmark/python/teleoperate_real_robot.py` (+24 -0)
- `examples/aloha/benchmark/ros2/README.md` (+103 -0)
- `examples/aloha/benchmark/ros2/config/master_modes_left.yaml` (+9 -0)
- `examples/aloha/benchmark/ros2/config/master_modes_right.yaml` (+9 -0)
- `examples/aloha/benchmark/ros2/config/puppet_modes_left.yaml` (+17 -0)
- `examples/aloha/benchmark/ros2/config/puppet_modes_right.yaml` (+17 -0)
- `examples/aloha/benchmark/ros2/dataflow.yml` (+4 -0)
- `examples/aloha/benchmark/ros2/setup_ros2.sh` (+22 -0)
- `examples/aloha/benchmark/ros2/teleop.py` (+90 -0)
- `examples/aloha/benchmark/rust/README.md` (+9 -0)
- `examples/aloha/graphs/eval.yml` (+141 -0)
- `examples/aloha/graphs/gym.yml` (+38 -0)
- `examples/aloha/graphs/record_2arms_teleop.yml` (+162 -0)
- `examples/aloha/graphs/record_teleop.yml` (+32 -0)
- `examples/aloha/graphs/replay.yml` (+61 -0)
- `examples/aloha/hardware_config/99-fixed-interbotix-udev.rules` (+4 -0)
- `examples/aloha/hardware_config/99-interbotix-udev.rules` (+24 -0)
- `examples/aloha/nodes/aloha-client/Cargo.toml` (+13 -0)
- `examples/aloha/nodes/aloha-client/src/main.rs` (+104 -0)
- `examples/aloha/nodes/aloha-teleop/Cargo.toml` (+15 -0)
- `examples/aloha/nodes/aloha-teleop/src/_main.rs` (+38 -0)
- `examples/aloha/nodes/aloha-teleop/src/main.rs` (+242 -0)
- `examples/aloha/nodes/gym_dora_node.py` (+66 -0)
- `examples/aloha/nodes/keyboard_node.py` (+31 -0)
- `examples/aloha/nodes/lerobot_webcam_saver.py` (+78 -0)
- `examples/aloha/nodes/llm_op.py` (+234 -0)
- `examples/aloha/nodes/plot_node.py` (+53 -0)
- `examples/aloha/nodes/policy.py` (+17 -0)
- `examples/aloha/nodes/realsense_node.py` (+28 -0)
- `examples/aloha/nodes/replay.py` (+27 -0)
- `examples/aloha/nodes/webcam.py` (+45 -0)
- `examples/aloha/nodes/whisper_node.py` (+59 -0)
- `examples/aloha/tests/test_realsense.py` (+24 -0)
- `examples/lebai/graphs/dataflow.yml` (+63 -0)
- `examples/lebai/graphs/dataflow_full.yml` (+128 -0)
- `examples/lebai/graphs/keyboard_teleop.yml` (+88 -0)
- `examples/lebai/graphs/qwenvl2.yml` (+115 -0)
- `examples/lebai/graphs/train.sh` (+1 -0)
- `examples/lebai/graphs/voice_teleop.yml` (+96 -0)
- `examples/lebai/nodes/interpolation.py` (+60 -0)
- `examples/lebai/nodes/key_interpolation.py` (+49 -0)
- `examples/lebai/nodes/prompt_interpolation.py` (+21 -0)
- `examples/lebai/nodes/vlm_prompt.py` (+1 -0)
- `examples/lebai/nodes/voice_interpolation.py` (+22 -0)
- `examples/reachy/README.md` (+103 -0)
- `examples/reachy/nodes/gym_dora_node.py` (+86 -0)
- `examples/reachy/nodes/keyboard_node.py` (+31 -0)
- `examples/reachy/nodes/lerobot_webcam_saver.py` (+78 -0)
- `examples/reachy/nodes/plot_node.py` (+56 -0)
- `examples/reachy/nodes/reachy_client.py` (+156 -0)
- `examples/reachy/nodes/reachy_vision_client.py` (+73 -0)
- `examples/reachy/nodes/replay_node.py` (+21 -0)
- `examples/reachy1/README.md` (+103 -0)
- `examples/reachy1/graphs/eval.yml` (+80 -0)
- `examples/reachy1/graphs/qwenvl2.yml` (+74 -0)
- `examples/reachy1/graphs/qwenvl2_recorder.yml` (+73 -0)
- `examples/reachy1/nodes/gym_dora_node.py` (+86 -0)
- `examples/reachy1/nodes/key_interpolation.py` (+41 -0)
- `examples/reachy1/nodes/keyboard_node.py` (+31 -0)
- `examples/reachy1/nodes/lerobot_webcam_saver.py` (+78 -0)
- `examples/reachy1/nodes/plot_node.py` (+56 -0)
- `examples/reachy1/nodes/reachy_client.py` (+156 -0)
- `examples/reachy1/nodes/reachy_vision_client.py` (+73 -0)
- `examples/reachy1/nodes/replay_node.py` (+21 -0)
- `examples/reachy1/nodes/text_interpolation.py` (+72 -0)
- `examples/so100/ASSEMBLING.md` (+12 -0)
- `examples/so100/CONFIGURING.md` (+75 -0)
- `examples/so100/INSTALLATION.md` (+82 -0)
- `examples/so100/README.md` (+68 -0)

examples/alexk-lcr/ASSEMBLING.md (+40 -0)

@@ -0,0 +1,40 @@

# Dora pipeline Robots

AlexK Low Cost Robot is a low-cost robotic arm that can be teleoperated using a similar arm. This repository contains the Dora pipeline to manipulate the arms, the camera, and record/replay episodes with LeRobot.

## Assembling

**Please read the instructions carefully before buying or printing the parts.**

You will need to get the parts for a Follower arm and a Leader arm:

- [AlexK Follower Low Cost Robot](https://github.com/AlexanderKoch-Koch/low_cost_robot/?tab=readme-ov-file#follower-arm)
- [AlexK Leader Low Cost Robot](https://github.com/AlexanderKoch-Koch/low_cost_robot/?tab=readme-ov-file#leader-arm)

You **must** assemble the arm **with the extension** to be able to do some of the tasks.

You then need to print the Follower arm and the Leader arm. The STL files are:

- [AlexK Follower Low Cost Robot](https://github.com/AlexanderKoch-Koch/low_cost_robot/tree/main/hardware/follower/stl)
- [AlexK Leader Low Cost Robot](https://github.com/AlexanderKoch-Koch/low_cost_robot/tree/main/hardware/leader/stl)

Some parts **must** be replaced by the ones in this repository:

- [Dora-LeRobot Base Leader Low Cost Robot](assets/stl/LEADER_Base.stl)

If you have trouble buying the XL330 Frame or the XL330/XL430 Idler Wheels, here are STL files that you can print instead:

- [XL330 Frame](assets/stl/XL330_Frame.stl)
- [XL330 Idler Wheel](assets/stl/XL330_Idler_Wheel.stl)
- [XL430 Idler Wheel](assets/stl/XL430_Idler_Wheel.stl)

Then follow the [YouTube Tutorial by Alexander Koch](https://youtu.be/RckrXOEoWrk?si=ZXDnnlF6BQd_o7v8) to assemble the arm correctly. Note that the tutorial is for the arm without the extension, so you will have to adapt the assembly.

Finally, place the two cameras on your desk as shown in this [image]().

## License

This library is licensed under the [Apache License 2.0](../../LICENSE).

examples/alexk-lcr/CONFIGURING.md (+90 -0)

@@ -0,0 +1,90 @@

# Dora pipeline Robots

AlexK Low Cost Robot is a low-cost robotic arm that can be teleoperated using a similar arm. This repository contains the Dora pipeline to manipulate the arms, the camera, and record/replay episodes with LeRobot.

## Configuring

Once you have assembled the robot and installed the required software, you can configure the robot. Configuring it correctly is essential for the robot to work as expected, for two reasons:

- You may have made some 'mistakes' during assembly, such as inverting motors or shifting their offsets by rotating them before mounting. Your configuration will then differ from the one we used to record the dataset.
- The recording pipeline needs to know the position of the motors to record the dataset correctly. If the motors are calibrated differently, the dataset will be incorrect.

**Please read the instructions carefully before configuring the robot.**

The first thing to do is to configure the servo bus:

- Set all the servos to the same baud rate (1M).
- Set the servo IDs from the base (1) to the gripper (6) on both the Follower and Leader arms.

These steps can be done with the official wizard provided by the manufacturer, [ROBOTIS](https://emanual.robotis.com/docs/en/software/dynamixel/dynamixel_wizard2/).
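For reference, the bus settings above can be written down as plain data. The sketch below is a hypothetical helper (it is not part of `configure.py` or of the ROBOTIS wizard); it assumes X-series servos such as the XL330/XL430, whose Baud Rate register (control table address 8) uses the value table from the ROBOTIS e-manual, and simply pairs each servo ID with the register value for the chosen baud rate.

```python
# Hypothetical helper, not part of this repository: it only encodes the bus
# plan described above (same baud rate everywhere, IDs 1..6 from base to gripper).

# Baud Rate register values for ROBOTIS X-series servos (e.g. XL330, XL430),
# as listed in the ROBOTIS e-manual control table (address 8).
X_SERIES_BAUD_RATE_VALUES = {
    9_600: 0,
    57_600: 1,
    115_200: 2,
    1_000_000: 3,
    2_000_000: 4,
    3_000_000: 5,
    4_000_000: 6,
}


def bus_plan(baud: int = 1_000_000, n_servos: int = 6) -> list[tuple[int, int]]:
    """Return (servo_id, baud_register_value) pairs, from the base (ID 1) to the gripper."""
    if baud not in X_SERIES_BAUD_RATE_VALUES:
        raise ValueError(f"unsupported baud rate: {baud}")
    value = X_SERIES_BAUD_RATE_VALUES[baud]
    return [(servo_id, value) for servo_id in range(1, n_servos + 1)]


if __name__ == "__main__":
    for servo_id, value in bus_plan():
        print(f"servo {servo_id}: Baud Rate register = {value}")
```

With the defaults, every one of the six servos gets register value 3 (1M baud); the wizard remains the tool that actually writes these values.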

After that, you need to configure the homing offsets and drive modes so that the robot behaves the same way for every user. We recommend using our built-in tool to set all of this automatically:

- Connect the Follower arm to your computer.
- Retrieve the device port from the official wizard.
- Run the configuration tool with the following command and follow the instructions:

```bash
cd dora-lerobot/

# Activate your Python environment (run only the line that matches your setup):
source [your_custom_env_bin]/activate  # Custom environment
source venv/bin/activate               # venv from the installation guide, on Linux
source venv/Scripts/activate           # venv on Windows bash
venv\Scripts\activate.bat              # venv on Windows cmd
venv\Scripts\activate.ps1              # venv on Windows PowerShell

python ./robots/alexk-lcr/configure.py --port /dev/ttyUSB0 --follower --left # (or right)
```

**Note:** Change `/dev/ttyUSB0` to the device port you retrieved from the official wizard (e.g. `COM3` on Windows).

**Note:** The configuration tool disables all torque so you can move the arm freely to Position 1.

**Note:** You will be asked to place the arm in two different positions. The two positions are:

**Note:** You will be asked for the path of the configuration file; press Enter to use the default one.

- Repeat the same steps for the Leader arm:

```bash
python ./robots/alexk-lcr/configure.py --port /dev/ttyUSB1 --leader --left # (or right)
```

**Note:** Change `/dev/ttyUSB1` to the device port you retrieved from the official wizard (e.g. `COM4` on Windows).

**Note:** The configuration tool disables all torque so you can move the arm freely to Position 1.

**Note:** You will be asked for the path of the configuration file; press Enter to use the default one.
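The exact algorithm of the configuration tool is not described here, but the idea behind homing offsets and drive modes can be sketched. The example below is a hypothetical model, not the code of `configure.py`: it assumes raw readings in position ticks (X-series servos report 4096 per turn), models a reversed drive mode simply as negating the raw reading, and derives an offset so that Position 1 and Position 2 read as chosen target values (`target_1`, `target_2`) on every user's arm.

```python
from dataclasses import dataclass


@dataclass
class JointCalibration:
    """Hypothetical per-joint calibration: reading = sign * raw + homing_offset."""

    drive_mode: int  # 0 = normal direction, 1 = reversed (modeled here as negation)
    homing_offset: int  # ticks added after the optional sign flip

    def apply(self, raw: int) -> int:
        sign = -1 if self.drive_mode else 1
        return sign * raw + self.homing_offset


def calibrate_joint(raw_1: int, raw_2: int, target_1: int, target_2: int,
                    tolerance: int = 256) -> JointCalibration:
    """Derive a calibration from raw readings taken at Position 1 and Position 2.

    target_1 / target_2 are the readings every user should get at those
    positions; tolerance (in ticks) guards against a badly placed arm.
    """
    expected = target_2 - target_1
    measured = raw_2 - raw_1
    # If the joint moved the "wrong" way, treat the motor as mounted inverted.
    drive_mode = 0 if (measured >= 0) == (expected >= 0) else 1
    sign = -1 if drive_mode else 1
    if abs(sign * measured - expected) > tolerance:
        raise ValueError(
            f"measured span {sign * measured} ticks, expected about {expected}; "
            "check that the arm was really in Position 1 and Position 2"
        )
    return JointCalibration(drive_mode, target_1 - sign * raw_1)
```

For example, a joint that reads 2048 at Position 1 and 1024 at Position 2, with targets 0 and 1024, gets `drive_mode=1` and `homing_offset=2048`, after which both positions read as their targets. Real servo firmware applies drive mode and homing offset in its own way, so check the ROBOTIS e-manual before relying on this model.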

After following the guide, you should have the following configuration:

This configuration has to be exported as environment variables inside the graph file. Here is an example:

```yaml
nodes:
  - id: lcr-follower
    env:
      PORT: /dev/ttyUSB0
      CONFIG: ../configs/follower.left.json # relative path to `./robots/alexk-lcr/configs/follower.left.json`

  - id: lcr-to-lcr
    env:
      LEADER_CONTROL: ../configs/leader.left.json
      FOLLOWER_CONTROL: ../configs/follower.left.json
```
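On the node side, a process started from this graph can read these variables from its environment. The sketch below is hypothetical: the real nodes may parse their configuration differently, and the JSON layout written by `configure.py` is not shown here, so the sketch just returns the parsed file as a plain dictionary. The function name `load_arm_config` is made up for illustration.

```python
import json
import os
from pathlib import Path
from typing import Any


def load_arm_config(port_var: str = "PORT",
                    config_var: str = "CONFIG") -> tuple[str, dict[str, Any]]:
    """Read the serial port and the calibration file named in the graph's `env` section."""
    try:
        port = os.environ[port_var]
        config_path = Path(os.environ[config_var])
    except KeyError as missing:
        raise RuntimeError(f"environment variable {missing} is not set in the graph file") from None
    # A relative path such as ../configs/follower.left.json is resolved
    # against the node's current working directory.
    with config_path.open(encoding="utf-8") as f:
        config = json.load(f)
    return port, config
```

Failing early with a clear message when `PORT` or `CONFIG` is missing makes a misconfigured graph much easier to diagnose than a serial-port error later on.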

## License

This library is licensed under the [Apache License 2.0](../../LICENSE).

examples/alexk-lcr/INSTALLATION.md (+82 -0)

@@ -0,0 +1,82 @@

# Dora pipeline Robots

AlexK Low Cost Robot is a low-cost robotic arm that can be teleoperated using a similar arm. This repository contains the Dora pipeline to manipulate the arms, the camera, and record/replay episodes with LeRobot.

## Installation

Dataflow-oriented robotic application (Dora) is a framework that makes creating robotic applications fast and simple. See the [Dora repository](https://github.com/dora-rs/dora).

**Please read the instructions carefully before installing the required software and environment to run the robot.**

You must install Dora before attempting to run the AlexK Low Cost Robot pipeline. Here are the steps to install Dora:

- Install Rust by following the instructions at [Rustup](https://rustup.rs/). (You may need to install the Visual Studio C++ build tools on Windows.)
- Install Dora by running the following command:

```bash
cargo install dora-cli
```

Now you're ready to run Rust dataflow applications! We decided to build the AlexK Low Cost Robot dataflow in Python only, so you also need to set up your Python environment:

- Install Python 3.12 or later by following the instructions at [Python](https://www.python.org/downloads/).
- Clone this repository by running the following command:

```bash
git clone https://github.com/dora-rs/dora-lerobot
```

- Open a bash terminal and navigate to the repository by running the following command:

```bash
cd dora-lerobot
```

- Create a virtual environment by running the following command. (On Linux, you can list your Python executables with `whereis python3`; on Windows, the default location is `C:\Users\<User>\AppData\Local\Programs\Python\Python312\python.exe`.)

```bash
path_to_your_python3_executable -m venv venv
```

- Activate the virtual environment and install the required Python packages by running the following commands:

```bash
# Activate your Python environment (run only the line that matches your setup):
source [your_custom_env_bin]/activate  # Custom environment
source venv/bin/activate               # venv from the step above, on Linux
source venv/Scripts/activate           # venv on Windows bash
venv\Scripts\activate.bat              # venv on Windows cmd
venv\Scripts\activate.ps1              # venv on Windows PowerShell

pip install -r robots/alexk-lcr/requirements.txt
```

If you want to install the required Python packages in development mode, you can run the following command instead, but you will then have to skip `dora build` when running the graphs:

```bash
pip install -r robots/alexk-lcr/development.txt # You MUST run this from inside dora-lerobot, not robots/alexk-lcr
```

**Note**: You're free to use your own Python environment, a Conda environment, or whatever you prefer; just activate it before running `dora up && dora start [graph].yml`.

To record episodes, you need ffmpeg installed on your system. You can download it from the [official website](https://ffmpeg.org/download.html). On Windows, you can download the latest build from [here](https://www.gyan.dev/ffmpeg/builds/), extract the zip file, and add its `bin` folder to your PATH.

On Linux, you can install ffmpeg with your distribution's package manager (e.g. `sudo apt install ffmpeg` on Ubuntu). On macOS, you can use `brew install ffmpeg`.
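Before running a graph, you can quickly verify the prerequisites from this guide. This is a hypothetical convenience snippet, not shipped with the repository: using only the standard library, it checks the Python version and whether the `dora` and `ffmpeg` executables are on your PATH.

```python
import shutil
import sys


def check_prerequisites(min_python: tuple[int, int] = (3, 12),
                        tools: tuple[str, ...] = ("dora", "ffmpeg")) -> list[str]:
    """Return human-readable problems; an empty list means all checks passed."""
    problems = []
    if sys.version_info < min_python:
        found = ".".join(map(str, sys.version_info[:3]))
        problems.append(f"Python {min_python[0]}.{min_python[1]}+ required, found {found}")
    for tool in tools:
        if shutil.which(tool) is None:  # executable not found on PATH
            problems.append(f"`{tool}` not found on PATH")
    return problems


for problem in check_prerequisites():
    print("-", problem)
```

Run it with the interpreter from your activated environment, since that is the Python the graphs will use.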

## License

This library is licensed under the [Apache License 2.0](../../LICENSE).

examples/alexk-lcr/README.md (+174 -0)

@@ -0,0 +1,174 @@

# Dora pipeline Robots

AlexK Low Cost Robot is a low-cost robotic arm that can be teleoperated using a similar arm. This repository contains the Dora pipeline to record episodes for LeRobot.

## Assembling

Check the [ASSEMBLING.md](ASSEMBLING.md) file for instructions on how to assemble the robot from scratch using the provided parts from the [AlexK Low Cost Robot](https://github.com/AlexanderKoch-Koch/low_cost_robot).

## Installation

Check the [INSTALLATION.md](INSTALLATION.md) file for instructions on how to install the required software and environment to run the robot.

## Configuring

Check the [CONFIGURING.md](CONFIGURING.md) file for instructions on how to configure the robot to record episodes for LeRobot and teleoperate the robot.

## Recording

We recommend trying the [examples](#examples) below before recording episodes; they will give you a better understanding of how Dora works.

Check the [RECORDING.md](RECORDING.md) file for instructions on how to record episodes for LeRobot.

## Examples

There are also some other example applications in the `graphs` folder. Have fun!

Here is a list of the available examples:

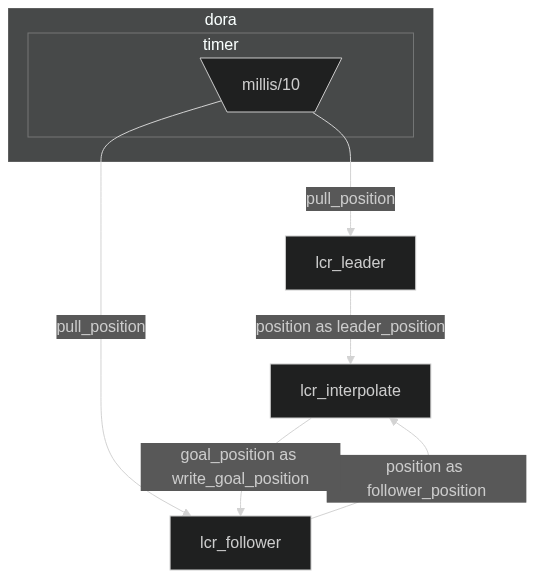

- `mono_teleop_real.yml`: A simple real teleoperation pipeline that allows you to control a follower arm using a leader arm. It does not record the episodes, so you don't need a camera.

You must configure the arms, retrieve the device ports, and modify the file `mono_teleop_real.yml` to set the correct environment variables (e.g. `PORT`, `CONFIG`, `LEADER_CONTROL` and `FOLLOWER_CONTROL`).

```bash
cd dora-lerobot/

# Activate your Python environment (run only the line that matches your setup):
source [your_custom_env_bin]/activate  # Custom environment
source venv/bin/activate               # venv from INSTALLATION.md, on Linux
source venv/Scripts/activate           # venv on Windows bash
venv\Scripts\activate.bat              # venv on Windows cmd
venv\Scripts\activate.ps1              # venv on Windows PowerShell

dora build ./robots/alexk-lcr/graphs/mono_teleop_real.yml # Only needed the first time: installs the requirements if needed
dora up
dora start ./robots/alexk-lcr/graphs/mono_teleop_real.yml
```

[Dataflow graph](https://mermaid.live/edit#pako:eNqVUsFOxCAQ_RUy591Urz14MF496W0xZCzTlkihmUI2ZrP_LtDtutomRg4w83jvMcCcoPGaoAZxGa31x6ZHDuL1UTohbMPKEmriJTMuEI_eYqAFar1NskyZ4nvHOPZCKaU9Y1rEIQdvmXu7G8xAfJkzqUSFJUQWVAWoBmOtmar7u4OU17gqPHJaujJtK8R-L8ZorRr9ZILxLgEPGxdaqi_8hYqTWPC1fuMJZsvfFjP6p8H_qv9-7dWHZFHn8UaUijiyCaR-wmsv2EE6f0CjUzecsreE0NNAEuoUauQPCdKdEw9j8C-froE6cKQdsI9dD3WLdkpZHHWq5Mlg-urhipI2wfPz3Gyl585fka3hkA)

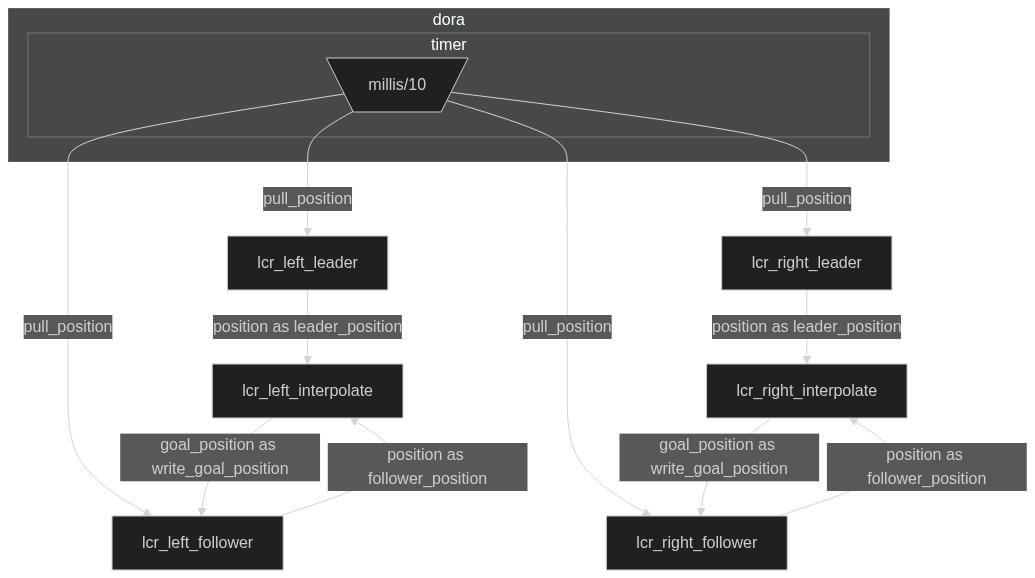

- `bi_teleop_real.yml`: A simple real teleoperation pipeline that allows you to control two follower arms using two leader arms (left and right). It does not record the episodes, so you don't need a camera.

You must configure the arms, retrieve the device ports, and modify the file `bi_teleop_real.yml` to set the correct environment variables (e.g. `PORT` and `CONFIG`).

```bash
cd dora-lerobot/

# Activate your Python environment (run only the line that matches your setup):
source [your_custom_env_bin]/activate  # Custom environment
source venv/bin/activate               # venv from INSTALLATION.md, on Linux
source venv/Scripts/activate           # venv on Windows bash
venv\Scripts\activate.bat              # venv on Windows cmd
venv\Scripts\activate.ps1              # venv on Windows PowerShell

dora build ./robots/alexk-lcr/graphs/bi_teleop_real.yml # Only needed the first time: installs the requirements if needed
dora up
dora start ./robots/alexk-lcr/graphs/bi_teleop_real.yml
```

[Dataflow graph](https://mermaid.live/edit#pako:eNqlVMFugzAM_ZUo51ZsVw47TLvutN2aKsqIgWghQSZRNVX99yWhtAXBNjoOxrz4vdgmzpEWVgLNKTk_pbaHohboyPszM4ToArmG0gUjJOAIUsYBtlYLByO8tDqoXINRVfVUoMdmFPqFq0TnPyoUbU0459KiCC-yi84-Mm5XnWoAzzYGJS9FERIJWQKyRmmtuuzxYcfYxc9SHBjJTDLzDLLdktZrzVvbKaesCcDTjy0a6kjMgSQ6MuALSkud7XeYivXo36TuKGv6O6eykV5ZcUMPOR1QOeBjeFF1XVLLx2l9t385huv6PSt2T23zA_Sflk916YaGjBqhZJj9Y9yHUVdDA4zmwZUCPxll5hTihHf27csUNHfoYUPR-qqmeSl0F758K0M-L0qEMWwuKEjlLL72V0u6YU7fOOqbHg)

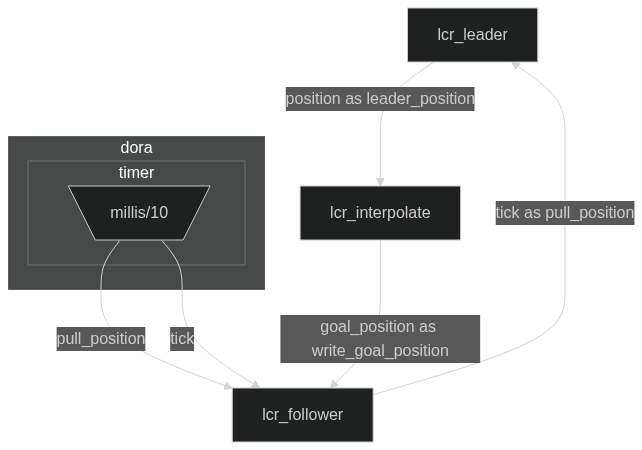

- `mono_teleop_simu.yml`: A simple simulation teleoperation pipeline that allows you to control a simulated follower arm using a leader arm. It does not record the episodes, so you don't need a camera.

You must configure the arms, retrieve the device port, and modify the file `mono_teleop_simu.yml` to set the correct environment variables (e.g. `PORT` and `CONFIG`).

```bash
cd dora-lerobot/

# Activate your Python environment (run only the line that matches your setup):
source [your_custom_env_bin]/activate  # Custom environment
source venv/bin/activate               # venv from INSTALLATION.md, on Linux
source venv/Scripts/activate           # venv on Windows bash
venv\Scripts\activate.bat              # venv on Windows cmd
venv\Scripts\activate.ps1              # venv on Windows PowerShell

dora build ./robots/alexk-lcr/graphs/mono_teleop_simu.yml # Only needed the first time: installs the requirements if needed
dora up
dora start ./robots/alexk-lcr/graphs/mono_teleop_simu.yml
```

[Dataflow graph](https://mermaid.live/edit#pako:eNp1UstuwyAQ_JUV50Rurz70UPXaU3sLFdqatY2CwcKgqIry711w4ubhcoDdYWZ3eBxF4zWJWsB5tNYfmh5DhM9X6QBsE5Ql1BQumXGRwugtRrpArbcsy5QpfXcBxx6UUtoH5AV2OfjK3OvdaAYK5zmTSlRYAFlQFaAajLVmqp6fdlIucVV45LR0Zbp1AdstRNPsAScYk7Vq9JOJxjveeFk50Jxl1UJk5Yw-au-Ov2a1lFpt_HdR_yuL9TXBXffM7TxedWHXh2AiqVv4sZbYCG47oNH88sdcW4rY00BS1BxqDHsppDsxD1P0Hz-uEXUMiTYi-NT1om7RTpylUbOTN4P8rMOCkjbRh_f5Y5X_dfoF5ZjY9g)

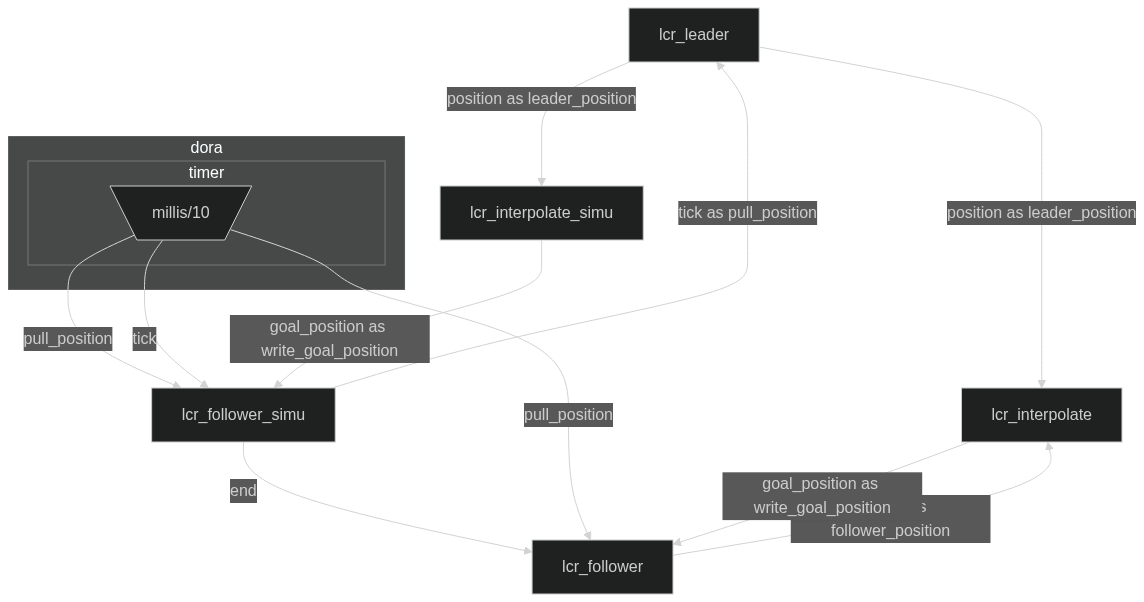

- `mono_teleop_real_and_simu.yml`: A simple real and simulation teleoperation pipeline that allows you to control both a simulated and a real follower arm using a real leader arm. It does not record the episodes, so you don't need a camera.

You must configure the arms, retrieve the device ports, and modify the file `mono_teleop_real_and_simu.yml` to set the correct environment variables (e.g. `PORT` and `CONFIG`).

```bash
cd dora-lerobot/

# Activate your Python environment (run only the line that matches your setup):
source [your_custom_env_bin]/activate  # Custom environment
source venv/bin/activate               # venv from INSTALLATION.md, on Linux
source venv/Scripts/activate           # venv on Windows bash
venv\Scripts\activate.bat              # venv on Windows cmd
venv\Scripts\activate.ps1              # venv on Windows PowerShell

dora build ./robots/alexk-lcr/graphs/mono_teleop_real_and_simu.yml # Only needed the first time: installs the requirements if needed
dora up
dora start ./robots/alexk-lcr/graphs/mono_teleop_real_and_simu.yml
```

[Dataflow graph](https://mermaid.live/edit#pako:eNqdU8luwyAQ_RXEOZHbqw89VL321N5ChajBMQqLxaKoivLvHXCM3IS0lX3Aw-O9YRbmhDvLBW4xuny9ssduYC6g92diEFKdo0owLty8kyYIN1rFgpih3iqQVSnUSx2veRfQx8-9Y-OAKKXcOgY_tEvGRxIsT4PUoJrWRMpWZiGUBE0GGi2Vkr55fNgRUuwm84ThxOSlEgrablGQ3QExj8aoFB2tl0FaAwdPlRLM4qQrVNAWpzf6StEml9cuJvRfDm5SgPQKf9mSWoXyvdVUf2lmEu0tW4gg4qOT0Oaf8D1fq3Muz2hdLn_Kc_fvqmrBrK5FVuMNhhg0kxxm75TuIDgMQguCWzA5cweCiTkDj8Vg375Mh9vgothgZ-N-wG3PlIddHDlE9CIZzIouqOAyWPc6jXae8PM3I_doSQ)

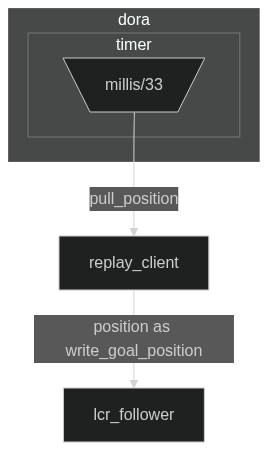

- `mono_replay_real.yml`: A simple real replay pipeline that allows you to replay a recorded episode.

You must set the dataset path and the episode index in the file `mono_replay_real.yml` through the corresponding environment variables (e.g. `PATH` and `EPISODE`). You must also configure the follower arm, retrieve the device port, and set its environment variables in the same file (e.g. `PORT` and `CONFIG`).

```bash
cd dora-lerobot/

# Activate your Python environment (run only the line that matches your setup):
source [your_custom_env_bin]/activate  # Custom environment
source venv/bin/activate               # venv from INSTALLATION.md, on Linux
source venv/Scripts/activate           # venv on Windows bash
venv\Scripts\activate.bat              # venv on Windows cmd
venv\Scripts\activate.ps1              # venv on Windows PowerShell

dora build ./robots/alexk-lcr/graphs/mono_replay_real.yml # Only needed the first time: installs the requirements if needed
dora up
dora start ./robots/alexk-lcr/graphs/mono_replay_real.yml
```

[Dataflow graph](https://mermaid.live/edit#pako:eNptkbFuAyEMhl_F8pzohmw3dKiydmq3UCH38N2hcoB8oCiK8u4BmkZNWwbz8_PZCPuMQzCMPcJtjS4ch5kkwduz8gDC0dFJD86yT9Vwg-gxuIKxKL_mj0kozqC1NkGobHCo4r2yP2-TXVhusUJNNQqgJnTN6BbrnF273e6g1F13jWNvlG_h_wzYbiFm53QMq002-GI8_f3Ag9FyvnFa4Sg2sZ4C_ary-GvcYHl5IWtK5861qMI088IK-yINyadC5S-Fo5zC68kP2CfJvEEJeZqxH8mt5ZSjocR7S6VNy91lY1OQl6_BtPlcrmjBlKg)
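To replay an episode, the pipeline has to pick that episode's frames out of the dataset and play them in order. The sketch below is hypothetical and independent of the replay node in this PR: it assumes each dataset row is a dictionary with `episode_index` and `frame_index` fields (the naming used by LeRobot datasets) plus an `action` value, and returns the actions of the episode selected by something like the `EPISODE` variable.

```python
from typing import Any


def episode_actions(rows: list[dict[str, Any]], episode: int) -> list[Any]:
    """Return the `action` values of one episode, sorted by frame index."""
    frames = [row for row in rows if row["episode_index"] == episode]
    if not frames:
        raise ValueError(f"episode {episode} not found in dataset")
    frames.sort(key=lambda row: row["frame_index"])
    return [row["action"] for row in frames]
```

Sorting by `frame_index` rather than trusting storage order keeps the replay correct even if rows were written or loaded out of order.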

## License

This library is licensed under the [Apache License 2.0](../../LICENSE).

examples/alexk-lcr/RECORDING.md (+80 -0)

@@ -0,0 +1,80 @@

# Dora pipeline Robots

AlexK Low Cost Robot is a low-cost robotic arm that can be teleoperated using a similar arm. This repository contains the Dora pipeline to manipulate the arms, the camera, and record/replay episodes with LeRobot.

## Recording

This section explains how to record episodes for LeRobot using the AlexK Low Cost Robot.

Recording is the process of teleoperating the robot and saving the episodes to a dataset. The dataset is then used to train the robot to perform tasks autonomously.

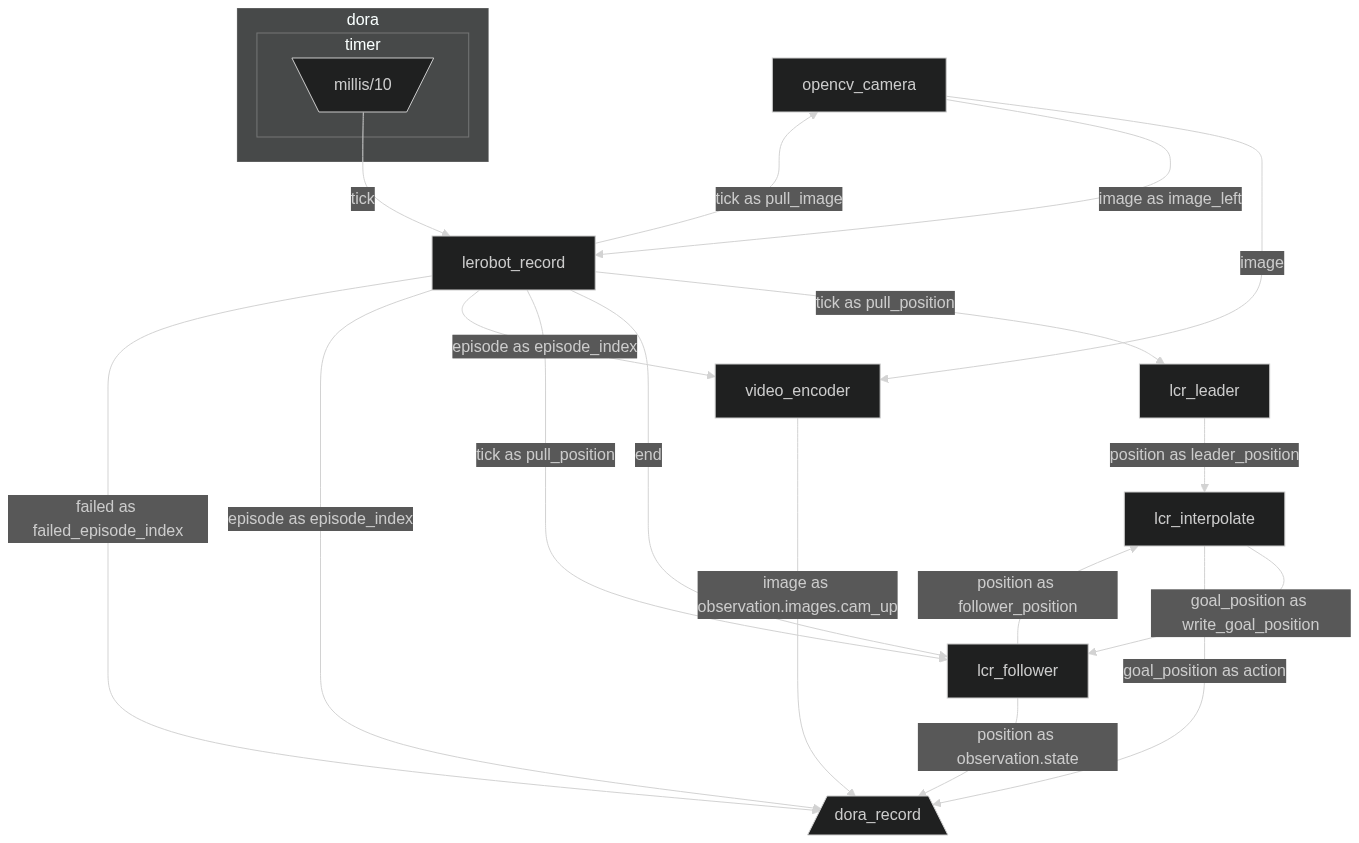

To record episodes with Dora, you have to configure the dataflow file `record_mono_teleop_real.yml` to integrate the arms and the camera. The graph file is located in the `graphs` folder.

Make sure to:

- Adjust the serial ports of `lcr-leader` and `lcr-follower` in the `record_mono_teleop_real.yml` file.
- Adjust the camera PATH in the `record_mono_teleop_real.yml` file.
- Adjust the image and video WIDTH and HEIGHT in the `record_mono_teleop_real.yml` file, if needed.
- Set the recording framerate to match your camera's framerate in the `record_mono_teleop_real.yml` file.
- Adjust the CONFIG path environment variables for both arms in the `record_mono_teleop_real.yml` file, if needed.
- Adjust the `LEADER_CONTROL` and `FOLLOWER_CONTROL` environment variables in the `record_mono_teleop_real.yml` file, if needed.

You can now start the Dora pipeline to record episodes for LeRobot:

```bash
cd dora-lerobot

# Activate your Python environment (run only the line that matches your setup):
source [your_custom_env_bin]/activate  # Custom environment
source venv/bin/activate               # venv from INSTALLATION.md, on Linux
source venv/Scripts/activate           # venv on Windows bash
venv\Scripts\activate.bat              # venv on Windows cmd
venv\Scripts\activate.ps1              # venv on Windows PowerShell

dora build ./robots/alexk-lcr/graphs/record_mono_teleop_real.yml # Only needed the first time: installs the requirements if needed
dora up
dora start ./robots/alexk-lcr/graphs/record_mono_teleop_real.yml
```

You can then teleoperate the follower with the leader. A window will pop up showing the camera feed and some text.

1. Press Space to start/stop recording.
2. Press Return to mark the current episode as failed, or the previous episode if you have not started the current one yet.
3. Close the window to stop the recording.
4. Write down the location of the logs (e.g. `018fc3a8-3b76-70f5-84a2-22b84df24739`); this is where the dataset (and logs) are stored.
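The key bindings above behave like a small state machine. The sketch below is a hypothetical model of that behaviour, not the recording node's code, and the class and method names are made up: Space starts a new episode or stops the running one, and Return flags the running episode, or the last finished one when none is running.

```python
from dataclasses import dataclass, field


@dataclass
class EpisodeRecorder:
    """Hypothetical model of the recording window's key bindings."""

    recording: bool = False
    episode_index: int = -1  # -1 means no episode has been started yet
    failed: set[int] = field(default_factory=set)

    def on_space(self) -> None:
        """Start a new episode, or stop the one being recorded."""
        if self.recording:
            self.recording = False
        else:
            self.episode_index += 1
            self.recording = True

    def on_return(self) -> None:
        """Mark the current episode (or the previous one, if none is running) as failed."""
        if self.episode_index >= 0:
            self.failed.add(self.episode_index)
```

Because stopping an episode does not advance the index, "current" and "previous" both resolve to `episode_index`, which is why a single field is enough here.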

You can now use our script to convert the logs into a usable dataset:

```bash
cd dora-lerobot

# Activate your Python environment (run only the line that matches your setup):
source [your_custom_env_bin]/activate  # Custom environment
source venv/bin/activate               # venv from INSTALLATION.md, on Linux
source venv/Scripts/activate           # venv on Windows bash
venv\Scripts\activate.bat              # venv on Windows cmd
venv\Scripts\activate.ps1              # venv on Windows PowerShell

python ./datasets/build_dataset.py --record-path [path_to_recorded_logs] --dataset-name [dataset_name] --framerate [framerate]
```

**Note:** By default, the framerate is 30. If you recorded with a different framerate, you will have to set it with `--framerate`.
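The framerate matters because recorded timestamps end up as frame positions in the dataset. As a hypothetical illustration (not the logic of `build_dataset.py`), assuming timestamps in nanoseconds, the same recording maps to different frame indices at 30 and 60 fps, so passing the wrong `--framerate` would misalign the data:

```python
def timestamps_to_frames(timestamps_ns: list[int], framerate: int = 30) -> list[int]:
    """Map timestamps (ns) to frame indices relative to the first timestamp."""
    if framerate <= 0:
        raise ValueError("framerate must be positive")
    if not timestamps_ns:
        return []
    start = timestamps_ns[0]
    return [round((t - start) * framerate / 1_000_000_000) for t in timestamps_ns]


stamps = [0, 33_333_333, 66_666_667, 100_000_000]  # roughly 30 fps spacing
print(timestamps_to_frames(stamps, 30))  # [0, 1, 2, 3]
print(timestamps_to_frames(stamps, 60))  # [0, 2, 4, 6]
```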

## The Dora graph

[Dataflow graph](https://mermaid.live/edit#pako:eNqdVMFu2zAM_RVB57berjn0MOzaU3eLCoGR6ESobBqSnK4o-u-j5NizE6Np64NBPfE9Us-03qQhi3IjxempPb2YA4Qk_vxSrRDeBO0RLIZx5dqEoSMPCUeoJs-0IYU6bM1RG2gwQAaOziJpBmkUwUA7SjqgoWAzYinAabmtZgulnlQb-90-QHcQWuuyp7XY5uApU-e7yXHN0zsnlahkDSWqAlSN897F6uePrVJTXJU8bLmf8jpvU9zeiuTMs4Aout573VF0yVHLG_crLo2WZN6UytwRv-Sv-DpInksM6HWBi_75YFPy_JN99aQL7rJwJu8JZiRWeQkuoV7C3-jDNbDHQryYsZWzdi7ywGXyKeQ2Lf4t_IuRXAhm-v9an83lQiXgj1an4Xirc34-hNMx1ymf8RfMZOn85_m6L1fZNTiPtsxxifRVjYV9C7doFzEcIbd-V8B4x57qvltt5YNfai4U02DSQkDeSLa8AWf5onvLckqmAzao5IZDC-FZSdW-cx70iR5fWyM3KfR4IwP1-4Pc1OAjr_rOsvxvB3zlNBOK1iUKD8M9Wq7T93-SiOfx)

## License

This library is licensed under the [Apache License 2.0](../../LICENSE).

examples/alexk-lcr/assets/simulation/lift_cube.xml (+135 -0)

@@ -0,0 +1,135 @@

<mujoco model="bd1 scene">
  <option timestep="0.005"/>
  <compiler angle="radian" autolimits="true"/>
  <asset>
    <mesh name="base" file="base.stl"/>
    <mesh name="dc11_a01_spacer_dummy" file="dc11_a01_spacer_dummy.stl"/>
    <mesh name="dc11_a01_dummy" file="dc11_a01_dummy.stl"/>
    <mesh name="rotation_connector" file="rotation_connector.stl"/>
    <mesh name="arm_connector" file="arm_connector.stl"/>
    <mesh name="dc15_a01_horn_idle2_dummy" file="dc15_a01_horn_idle2_dummy.stl"/>
    <mesh name="dc15_a01_case_m_dummy" file="dc15_a01_case_m_dummy.stl"/>
    <mesh name="dc15_a01_case_f_dummy" file="dc15_a01_case_f_dummy.stl"/>
    <mesh name="dc15_a01_horn_dummy" file="dc15_a01_horn_dummy.stl"/>
    <mesh name="dc15_a01_case_b_dummy" file="dc15_a01_case_b_dummy.stl"/>
    <mesh name="connector" file="connector.stl"/>
    <mesh name="shoulder_rotation" file="shoulder_rotation.stl"/>
    <mesh name="static_side" file="static_side.stl"/>
    <mesh name="moving_side" file="moving_side.stl"/>
    <texture type="skybox" builtin="gradient" rgb1="0.3 0.5 0.7" rgb2="0 0 0" width="512" height="3072" />
    <texture type="2d" name="groundplane" builtin="checker" mark="edge" rgb1="0.2 0.3 0.4" rgb2="0.1 0.2 0.3" markrgb="0.8 0.8 0.8" width="300" height="300" />
    <material name="groundplane" texture="groundplane" texuniform="true" texrepeat="5 5" reflectance="0.2" />
  </asset>
  <visual>
    <headlight diffuse="0.6 0.6 0.6" ambient="0.3 0.3 0.3" specular="0 0 0" />
    <rgba haze="0.15 0.25 0.35 1" />
    <global azimuth="150" elevation="-20" offheight="640" />
  </visual>
  <worldbody>
    <light pos="0 0 3" dir="0 0 -1" directional="false" />
    <body name="floor">
      <geom pos="0 0 0" name="floor" size="0 0 .125" type="plane" material="groundplane" conaffinity="1" contype="1" />
    </body>
    <body name="cube" pos="0.1 0.1 0.01">
      <freejoint name="cube"/>
      <inertial pos="0 0 0" mass="0.1" diaginertia="0.00001125 0.00001125 0.00001125"/>
      <geom friction="0.5" condim="3" pos="0 0 0" size="0.015 0.015 0.015" type="box" name="cube" rgba="0.5 0 0 1" priority="1"/>
    </body>
    <camera name="camera_front" pos="0.049 0.888 0.317" xyaxes="-0.998 0.056 -0.000 -0.019 -0.335 0.942"/>
    <camera name="camera_top" pos="0 0 1" euler="0 0 0" mode="fixed"/>
    <camera name="camera_vizu" pos="-0.1 0.6 0.3" quat="-0.15 -0.1 0.6 1"/>
    <geom pos="0.0401555 -0.0353754 -0.0242427" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="base"/>
    <geom pos="0.0511555 0.0406246 0.0099573" quat="0 0 0 1" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0291555 0.000624643 0.0099573" quat="0 0 0 1" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0511555 0.000624643 -0.0184427" quat="0 0 1 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0401555 0.0326246 -0.0042427" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc11_a01_dummy"/>
    <geom pos="0.0291555 0.000624643 -0.0184427" quat="0 0.707107 0.707107 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0291555 0.0406246 0.0099573" quat="0.707107 0 0 -0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0511555 0.0406246 -0.0184427" quat="0 0.707107 -0.707107 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0291555 0.0406246 -0.0184427" quat="0 -1 0 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0511555 0.000624643 0.0099573" quat="0.707107 0 0 0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>

| <body name="pitch1_assembly" pos="0.0401555 0.0326246 0.0166573"> | |||

| <inertial pos="-0.000767103 -0.0121505 0.0134241" quat="0.498429 0.53272 -0.473938 0.493113" mass="0.0606831" diaginertia="1.86261e-05 1.72746e-05 1.11693e-05"/> | |||

| <joint name="shoulder_pan_joint" pos="0 0 0" axis="0 0 1" range="-3.14159 3.14159" damping="0.1" actuatorfrcrange="-0.5 0.5" actuatorfrclimited="true"/> | |||

| <geom pos="0 0 -0.0209" type="mesh" rgba="0.231373 0.380392 0.705882 1" mesh="rotation_connector"/> | |||

| <geom pos="-0.014 0.008 0.0264" quat="0 -0.707107 0 0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="-0.014 -0.032 0.0044" quat="0 -0.707107 0 0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="0.0144 -0.032 0.0264" quat="0.707107 0 0.707107 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="0.0002 0 0.0154" quat="0.707107 0 -0.707107 0" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc11_a01_dummy"/> | |||

| <geom pos="0.0144 -0.032 0.0044" quat="0.5 0.5 0.5 0.5" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="-0.014 0.008 0.0044" quat="0.5 0.5 -0.5 -0.5" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="0.0144 0.008 0.0264" quat="0.5 -0.5 0.5 -0.5" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="0.0144 0.008 0.0044" quat="0 0.707107 0 0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="-0.014 -0.032 0.0264" quat="0.5 -0.5 -0.5 0.5" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <body name="pitch2_assembly" pos="-0.0188 0 0.0154" quat="0 0.707107 0 0.707107"> | |||

| <inertial pos="0.0766242 -0.00031229 0.0187402" quat="0.52596 0.513053 0.489778 0.469319" mass="0.0432446" diaginertia="7.21796e-05 7.03107e-05 1.07533e-05"/> | |||

| <joint name="shoulder_lift_joint" pos="0 0 0" axis="0 0 1" range="-1.5708 1.22173" damping="0.1" actuatorfrcrange="-0.5 0.5" actuatorfrclimited="true"/> | |||

| <geom pos="0 0 0.019" quat="0.5 -0.5 -0.5 -0.5" type="mesh" rgba="0.980392 0.713726 0.00392157 1" mesh="arm_connector"/> | |||

| <geom pos="0.1083 -0.0148 0.03035" quat="1 0 0 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc15_a01_horn_idle2_dummy"/> | |||

| <geom pos="0.1083 -0.0148 0.01075" quat="0 -1 0 0" type="mesh" rgba="0.615686 0.811765 0.929412 1" mesh="dc15_a01_case_m_dummy"/> | |||

| <geom pos="0.1083 -0.0148 0.01075" quat="0 -1 0 0" type="mesh" rgba="0.980392 0.713726 0.00392157 1" mesh="dc15_a01_case_f_dummy"/> | |||

| <geom pos="0.1083 -0.0148 0.00715" quat="0 0 0.707107 -0.707107" type="mesh" rgba="0.972549 0.529412 0.00392157 1" mesh="dc15_a01_horn_dummy"/> | |||

| <geom pos="0.1083 -0.0148 0.03025" quat="0 -1 0 0" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc15_a01_case_b_dummy"/> | |||

| <body name="pitch3_assembly" pos="0.1083 -0.0148 0.00425" quat="0.707107 0 0 0.707107"> | |||

| <inertial pos="-0.0551014 -0.00287792 0.0144813" quat="0.500323 0.499209 0.499868 0.5006" mass="0.0788335" diaginertia="6.80912e-05 6.45748e-05 9.84479e-06"/> | |||

| <joint name="elbow_flex_joint" pos="0 0 0" axis="0 0 1" range="-1.48353 1.74533" damping="0.1" actuatorfrcrange="-0.5 0.5" actuatorfrclimited="true"/> | |||

| <geom pos="-0.00863031 0.00847376 0.0145" quat="0.5 0.5 0.5 -0.5" type="mesh" rgba="0.615686 0.811765 0.929412 1" mesh="connector"/> | |||

| <geom pos="-0.100476 -0.00269986 0.02635" quat="0.707107 0 0 -0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc15_a01_horn_idle2_dummy"/> | |||

| <geom pos="-0.100476 -0.00269986 0.00675" quat="0 -0.707107 0.707107 0" type="mesh" rgba="0.615686 0.811765 0.929412 1" mesh="dc15_a01_case_m_dummy"/> | |||

| <geom pos="-0.100476 -0.00269986 0.00675" quat="0 -0.707107 0.707107 0" type="mesh" rgba="0.980392 0.713726 0.00392157 1" mesh="dc15_a01_case_f_dummy"/> | |||

| <geom pos="-0.100476 -0.00269986 0.00315" quat="0.5 -0.5 -0.5 0.5" type="mesh" rgba="0.972549 0.529412 0.00392157 1" mesh="dc15_a01_horn_dummy"/> | |||

| <geom pos="-0.100476 -0.00269986 0.02625" quat="0 -0.707107 0.707107 0" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc15_a01_case_b_dummy"/> | |||

| <body name="effector_roll_assembly" pos="-0.100476 -0.00269986 0.02925" quat="0 -0.707107 -0.707107 0"> | |||

| <inertial pos="-1.65017e-05 -0.02659 0.0195388" quat="0.936813 0.349829 -0.00055331 -0.000300569" mass="0.0240506" diaginertia="6.03208e-06 4.12894e-06 3.3522e-06"/> | |||

| <joint name="wrist_flex_joint" pos="0 0 0" axis="0 0 1" range="-1.91986 1.91986" damping="0.1" actuatorfrcrange="-0.5 0.5" actuatorfrclimited="true"/> | |||

| <geom pos="-0.0109998 -0.0190002 0.039" quat="0.707107 -0.707107 0 0" type="mesh" rgba="0.615686 0.811765 0.929412 1" mesh="shoulder_rotation"/> | |||

| <geom pos="-7.44154e-06 -0.0385002 0.0133967" quat="0 0 0.707107 -0.707107" type="mesh" rgba="0.615686 0.811765 0.929412 1" mesh="dc15_a01_case_m_dummy"/> | |||

| <geom pos="-7.44154e-06 -0.0385002 0.0133967" quat="0 0 0.707107 -0.707107" type="mesh" rgba="0.980392 0.713726 0.00392157 1" mesh="dc15_a01_case_f_dummy"/> | |||

| <geom pos="-7.44154e-06 -0.0421002 0.0133967" quat="0 1 0 0" type="mesh" rgba="0.972549 0.529412 0.00392157 1" mesh="dc15_a01_horn_dummy"/> | |||

| <geom pos="-7.44154e-06 -0.0190002 0.0133967" quat="0 0 0.707107 -0.707107" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc15_a01_case_b_dummy"/> | |||

| <body name="gripper_assembly" pos="-7.44154e-06 -0.0450002 0.0133967" quat="0.5 -0.5 -0.5 -0.5"> | |||

| <inertial pos="-0.00548595 -0.000433143 -0.0190793" quat="0.700194 0.164851 0.167361 0.674197" mass="0.0360627" diaginertia="1.3261e-05 1.231e-05 5.3532e-06"/> | |||

| <joint name="wrist_roll_joint" pos="0 0 0" axis="0 0 1" range="-2.96706 2.96706" damping="0.1" actuatorfrcrange="-0.5 0.5" actuatorfrclimited="true"/> | |||

| <geom pos="-0.00075 -0.01475 -0.02" quat="0.707107 -0.707107 0 0" type="mesh" rgba="0.917647 0.917647 0.917647 1" mesh="static_side"/> | |||

| <geom pos="0.00755 0.01135 -0.013" quat="0.5 -0.5 0.5 0.5" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc15_a01_horn_idle2_dummy"/> | |||

| <geom pos="0.00755 -0.00825 -0.013" quat="0.5 0.5 0.5 -0.5" type="mesh" rgba="0.615686 0.811765 0.929412 1" mesh="dc15_a01_case_m_dummy"/> | |||

| <geom pos="0.00755 -0.00825 -0.013" quat="0.5 0.5 0.5 -0.5" type="mesh" rgba="0.980392 0.713726 0.00392157 1" mesh="dc15_a01_case_f_dummy"/> | |||

| <geom pos="0.00755 -0.01185 -0.013" quat="0 -0.707107 0 -0.707107" type="mesh" rgba="0.972549 0.529412 0.00392157 1" mesh="dc15_a01_horn_dummy"/> | |||

| <geom pos="0.00755 0.01125 -0.013" quat="0.5 0.5 0.5 -0.5" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc15_a01_case_b_dummy"/> | |||

| <body name="moving_side" pos="0.00755 -0.01475 -0.013" quat="0.707107 -0.707107 0 0"> | |||

| <inertial pos="-0.000395599 0.022415 0.0145636" quat="0.722353 0.689129 0.0389102 0.0423547" mass="0.0089856" diaginertia="3.28451e-06 2.24898e-06 1.41539e-06"/> | |||

| <joint name="gripper_joint" pos="0 0 0" axis="0 0 1" range="-1.74533 0.0523599" damping="0.1" actuatorfrcrange="-0.5 0.5" actuatorfrclimited="true"/> | |||

| <geom pos="-0.00838199 -0.000256591 -0.003" type="mesh" rgba="0.768627 0.886275 0.952941 1" mesh="moving_side"/> | |||

| </body> | |||

| </body> | |||

| </body> | |||

| </body> | |||

| </body> | |||

| </body> | |||

| </worldbody> | |||

| <actuator> | |||

| <position joint="shoulder_pan_joint" ctrlrange="-3.14159 3.14159" ctrllimited="true" kp="20" /> | |||

| <position joint="shoulder_lift_joint" ctrlrange="-1.5708 1.22173" ctrllimited="true" kp="20" /> | |||

| <position joint="elbow_flex_joint" ctrlrange="-1.48353 1.74533" ctrllimited="true" kp="20" /> | |||

| <position joint="wrist_flex_joint" ctrlrange="-1.91986 1.91986" ctrllimited="true" kp="20" /> | |||

| <position joint="wrist_roll_joint" ctrlrange="-2.96706 2.96706" ctrllimited="true" kp="20" /> | |||

| <position joint="gripper_joint" ctrlrange="-1.74533 0.0523599" ctrllimited="true" kp="20" /> | |||

| </actuator> | |||

| </mujoco> | |||
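The cube body in this scene declares its inertia explicitly, so MuJoCo uses the diaginertia value as written rather than deriving it from the box geom (the compiler only infers inertia from geoms when an inertial element is absent). As a cross-check, the diagonal inertia of a uniform solid box can be computed from its mass and the half-sizes MuJoCo uses for box geoms. The helper below is a minimal sketch that assumes uniform density and SI units; box_diag_inertia is a hypothetical name, not part of this repository. For mass 0.1 and half-size 0.015 it gives 1.5e-5 per axis, whereas the file declares 1.125e-5, so the written value presumably reflects a different assumption.

```python
def box_diag_inertia(mass, half_sizes):
    """Diagonal inertia of a uniform solid box about its centre of mass.

    half_sizes uses MuJoCo's box-geom convention (hx, hy, hz): half of each
    full edge length. Uniform density is an assumption of this sketch.
    """
    hx, hy, hz = half_sizes
    # With full edges a = 2*hx, b = 2*hy, c = 2*hz, the textbook formula
    # Ixx = m * (b**2 + c**2) / 12 simplifies to m * (hy**2 + hz**2) / 3.
    ixx = mass * (hy ** 2 + hz ** 2) / 3.0
    iyy = mass * (hx ** 2 + hz ** 2) / 3.0
    izz = mass * (hx ** 2 + hy ** 2) / 3.0
    return ixx, iyy, izz


if __name__ == "__main__":
    # Mass and half-sizes copied from the <body name="cube"> element above.
    computed = box_diag_inertia(0.1, (0.015, 0.015, 0.015))
    declared = (0.00001125, 0.00001125, 0.00001125)
    for axis, c, d in zip("xyz", computed, declared):
        print(f"I{axis}{axis}: computed={c:.4g} declared={d:.4g}")
```

The same helper can be reused if the cube's size or mass is changed in any of the task scenes.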

+135 -0 examples/alexk-lcr/assets/simulation/pick_place_cube.xml

@@ -0,0 +1,135 @@
<mujoco model="bd1 scene">
  <option timestep="0.005"/>
  <compiler angle="radian" autolimits="true"/>
  <asset>
    <mesh name="base" file="base.stl"/>
    <mesh name="dc11_a01_spacer_dummy" file="dc11_a01_spacer_dummy.stl"/>
    <mesh name="dc11_a01_dummy" file="dc11_a01_dummy.stl"/>
    <mesh name="rotation_connector" file="rotation_connector.stl"/>
    <mesh name="arm_connector" file="arm_connector.stl"/>
    <mesh name="dc15_a01_horn_idle2_dummy" file="dc15_a01_horn_idle2_dummy.stl"/>
    <mesh name="dc15_a01_case_m_dummy" file="dc15_a01_case_m_dummy.stl"/>
    <mesh name="dc15_a01_case_f_dummy" file="dc15_a01_case_f_dummy.stl"/>
    <mesh name="dc15_a01_horn_dummy" file="dc15_a01_horn_dummy.stl"/>
    <mesh name="dc15_a01_case_b_dummy" file="dc15_a01_case_b_dummy.stl"/>
    <mesh name="connector" file="connector.stl"/>
    <mesh name="shoulder_rotation" file="shoulder_rotation.stl"/>
    <mesh name="static_side" file="static_side.stl"/>
    <mesh name="moving_side" file="moving_side.stl"/>
    <texture type="skybox" builtin="gradient" rgb1="0.3 0.5 0.7" rgb2="0 0 0" width="512" height="3072" />
    <texture type="2d" name="groundplane" builtin="checker" mark="edge" rgb1="0.2 0.3 0.4" rgb2="0.1 0.2 0.3" markrgb="0.8 0.8 0.8" width="300" height="300" />
    <material name="groundplane" texture="groundplane" texuniform="true" texrepeat="5 5" reflectance="0.2" />
  </asset>
  <visual>
    <headlight diffuse="0.6 0.6 0.6" ambient="0.3 0.3 0.3" specular="0 0 0" />
    <rgba haze="0.15 0.25 0.35 1" />
    <global azimuth="150" elevation="-20" offheight="640" />
  </visual>
  <worldbody>
    <light pos="0 0 3" dir="0 0 -1" directional="false" />
    <body name="floor">
      <geom pos="0 0 0" name="floor" size="0 0 .125" type="plane" material="groundplane" conaffinity="1" contype="1" />
    </body>
    <body name="cube" pos="0.1 0.1 0.01">
      <freejoint name="cube"/>
      <inertial pos="0 0 0" mass="0.1" diaginertia="0.00001125 0.00001125 0.00001125"/>
      <geom friction="0.5" condim="3" pos="0 0 0" size="0.015 0.015 0.015" type="box" name="cube" rgba="0.5 0 0 1" priority="1"/>
    </body>
    <camera name="camera_front" pos="0.049 0.888 0.317" xyaxes="-0.998 0.056 -0.000 -0.019 -0.335 0.942"/>
    <camera name="camera_top" pos="0 0 1" euler="0 0 0" mode="fixed"/>
    <camera name="camera_vizu" pos="-0.1 0.6 0.3" quat="-0.15 -0.1 0.6 1"/>
    <geom pos="0.0401555 -0.0353754 -0.0242427" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="base"/>
    <geom pos="0.0511555 0.0406246 0.0099573" quat="0 0 0 1" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0291555 0.000624643 0.0099573" quat="0 0 0 1" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0511555 0.000624643 -0.0184427" quat="0 0 1 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0401555 0.0326246 -0.0042427" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc11_a01_dummy"/>
    <geom pos="0.0291555 0.000624643 -0.0184427" quat="0 0.707107 0.707107 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0291555 0.0406246 0.0099573" quat="0.707107 0 0 -0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0511555 0.0406246 -0.0184427" quat="0 0.707107 -0.707107 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0291555 0.0406246 -0.0184427" quat="0 -1 0 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0511555 0.000624643 0.0099573" quat="0.707107 0 0 0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <body name="pitch1_assembly" pos="0.0401555 0.0326246 0.0166573">
      <inertial pos="-0.000767103 -0.0121505 0.0134241" quat="0.498429 0.53272 -0.473938 0.493113" mass="0.0606831" diaginertia="1.86261e-05 1.72746e-05 1.11693e-05"/>
      <joint name="shoulder_pan_joint" pos="0 0 0" axis="0 0 1" range="-3.14159 3.14159" damping="0.1" actuatorfrcrange="-0.5 0.5" actuatorfrclimited="true"/>
      <geom pos="0 0 -0.0209" type="mesh" rgba="0.231373 0.380392 0.705882 1" mesh="rotation_connector"/>
      <geom pos="-0.014 0.008 0.0264" quat="0 -0.707107 0 0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
      <geom pos="-0.014 -0.032 0.0044" quat="0 -0.707107 0 0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
      <geom pos="0.0144 -0.032 0.0264" quat="0.707107 0 0.707107 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
      <geom pos="0.0002 0 0.0154" quat="0.707107 0 -0.707107 0" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc11_a01_dummy"/>
      <geom pos="0.0144 -0.032 0.0044" quat="0.5 0.5 0.5 0.5" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
      <geom pos="-0.014 0.008 0.0044" quat="0.5 0.5 -0.5 -0.5" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
      <geom pos="0.0144 0.008 0.0264" quat="0.5 -0.5 0.5 -0.5" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
      <geom pos="0.0144 0.008 0.0044" quat="0 0.707107 0 0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
      <geom pos="-0.014 -0.032 0.0264" quat="0.5 -0.5 -0.5 0.5" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
      <body name="pitch2_assembly" pos="-0.0188 0 0.0154" quat="0 0.707107 0 0.707107">
        <inertial pos="0.0766242 -0.00031229 0.0187402" quat="0.52596 0.513053 0.489778 0.469319" mass="0.0432446" diaginertia="7.21796e-05 7.03107e-05 1.07533e-05"/>
        <joint name="shoulder_lift_joint" pos="0 0 0" axis="0 0 1" range="-1.5708 1.22173" damping="0.1" actuatorfrcrange="-0.5 0.5" actuatorfrclimited="true"/>
        <geom pos="0 0 0.019" quat="0.5 -0.5 -0.5 -0.5" type="mesh" rgba="0.980392 0.713726 0.00392157 1" mesh="arm_connector"/>
        <geom pos="0.1083 -0.0148 0.03035" quat="1 0 0 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc15_a01_horn_idle2_dummy"/>
        <geom pos="0.1083 -0.0148 0.01075" quat="0 -1 0 0" type="mesh" rgba="0.615686 0.811765 0.929412 1" mesh="dc15_a01_case_m_dummy"/>
        <geom pos="0.1083 -0.0148 0.01075" quat="0 -1 0 0" type="mesh" rgba="0.980392 0.713726 0.00392157 1" mesh="dc15_a01_case_f_dummy"/>
        <geom pos="0.1083 -0.0148 0.00715" quat="0 0 0.707107 -0.707107" type="mesh" rgba="0.972549 0.529412 0.00392157 1" mesh="dc15_a01_horn_dummy"/>
        <geom pos="0.1083 -0.0148 0.03025" quat="0 -1 0 0" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc15_a01_case_b_dummy"/>
        <body name="pitch3_assembly" pos="0.1083 -0.0148 0.00425" quat="0.707107 0 0 0.707107">
          <inertial pos="-0.0551014 -0.00287792 0.0144813" quat="0.500323 0.499209 0.499868 0.5006" mass="0.0788335" diaginertia="6.80912e-05 6.45748e-05 9.84479e-06"/>
          <joint name="elbow_flex_joint" pos="0 0 0" axis="0 0 1" range="-1.48353 1.74533" damping="0.1" actuatorfrcrange="-0.5 0.5" actuatorfrclimited="true"/>
          <geom pos="-0.00863031 0.00847376 0.0145" quat="0.5 0.5 0.5 -0.5" type="mesh" rgba="0.615686 0.811765 0.929412 1" mesh="connector"/>
          <geom pos="-0.100476 -0.00269986 0.02635" quat="0.707107 0 0 -0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc15_a01_horn_idle2_dummy"/>
          <geom pos="-0.100476 -0.00269986 0.00675" quat="0 -0.707107 0.707107 0" type="mesh" rgba="0.615686 0.811765 0.929412 1" mesh="dc15_a01_case_m_dummy"/>
          <geom pos="-0.100476 -0.00269986 0.00675" quat="0 -0.707107 0.707107 0" type="mesh" rgba="0.980392 0.713726 0.00392157 1" mesh="dc15_a01_case_f_dummy"/>
          <geom pos="-0.100476 -0.00269986 0.00315" quat="0.5 -0.5 -0.5 0.5" type="mesh" rgba="0.972549 0.529412 0.00392157 1" mesh="dc15_a01_horn_dummy"/>
          <geom pos="-0.100476 -0.00269986 0.02625" quat="0 -0.707107 0.707107 0" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc15_a01_case_b_dummy"/>
          <body name="effector_roll_assembly" pos="-0.100476 -0.00269986 0.02925" quat="0 -0.707107 -0.707107 0">
            <inertial pos="-1.65017e-05 -0.02659 0.0195388" quat="0.936813 0.349829 -0.00055331 -0.000300569" mass="0.0240506" diaginertia="6.03208e-06 4.12894e-06 3.3522e-06"/>
            <joint name="wrist_flex_joint" pos="0 0 0" axis="0 0 1" range="-1.91986 1.91986" damping="0.1" actuatorfrcrange="-0.5 0.5" actuatorfrclimited="true"/>
            <geom pos="-0.0109998 -0.0190002 0.039" quat="0.707107 -0.707107 0 0" type="mesh" rgba="0.615686 0.811765 0.929412 1" mesh="shoulder_rotation"/>
            <geom pos="-7.44154e-06 -0.0385002 0.0133967" quat="0 0 0.707107 -0.707107" type="mesh" rgba="0.615686 0.811765 0.929412 1" mesh="dc15_a01_case_m_dummy"/>
            <geom pos="-7.44154e-06 -0.0385002 0.0133967" quat="0 0 0.707107 -0.707107" type="mesh" rgba="0.980392 0.713726 0.00392157 1" mesh="dc15_a01_case_f_dummy"/>
            <geom pos="-7.44154e-06 -0.0421002 0.0133967" quat="0 1 0 0" type="mesh" rgba="0.972549 0.529412 0.00392157 1" mesh="dc15_a01_horn_dummy"/>
            <geom pos="-7.44154e-06 -0.0190002 0.0133967" quat="0 0 0.707107 -0.707107" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc15_a01_case_b_dummy"/>
            <body name="gripper_assembly" pos="-7.44154e-06 -0.0450002 0.0133967" quat="0.5 -0.5 -0.5 -0.5">
              <inertial pos="-0.00548595 -0.000433143 -0.0190793" quat="0.700194 0.164851 0.167361 0.674197" mass="0.0360627" diaginertia="1.3261e-05 1.231e-05 5.3532e-06"/>
              <joint name="wrist_roll_joint" pos="0 0 0" axis="0 0 1" range="-2.96706 2.96706" damping="0.1" actuatorfrcrange="-0.5 0.5" actuatorfrclimited="true"/>
              <geom pos="-0.00075 -0.01475 -0.02" quat="0.707107 -0.707107 0 0" type="mesh" rgba="0.917647 0.917647 0.917647 1" mesh="static_side"/>
              <geom pos="0.00755 0.01135 -0.013" quat="0.5 -0.5 0.5 0.5" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc15_a01_horn_idle2_dummy"/>
              <geom pos="0.00755 -0.00825 -0.013" quat="0.5 0.5 0.5 -0.5" type="mesh" rgba="0.615686 0.811765 0.929412 1" mesh="dc15_a01_case_m_dummy"/>
              <geom pos="0.00755 -0.00825 -0.013" quat="0.5 0.5 0.5 -0.5" type="mesh" rgba="0.980392 0.713726 0.00392157 1" mesh="dc15_a01_case_f_dummy"/>
              <geom pos="0.00755 -0.01185 -0.013" quat="0 -0.707107 0 -0.707107" type="mesh" rgba="0.972549 0.529412 0.00392157 1" mesh="dc15_a01_horn_dummy"/>
              <geom pos="0.00755 0.01125 -0.013" quat="0.5 0.5 0.5 -0.5" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc15_a01_case_b_dummy"/>
              <body name="moving_side" pos="0.00755 -0.01475 -0.013" quat="0.707107 -0.707107 0 0">
                <inertial pos="-0.000395599 0.022415 0.0145636" quat="0.722353 0.689129 0.0389102 0.0423547" mass="0.0089856" diaginertia="3.28451e-06 2.24898e-06 1.41539e-06"/>
                <joint name="gripper_joint" pos="0 0 0" axis="0 0 1" range="-1.74533 0.0523599" damping="0.1" actuatorfrcrange="-0.5 0.5" actuatorfrclimited="true"/>
                <geom pos="-0.00838199 -0.000256591 -0.003" type="mesh" rgba="0.768627 0.886275 0.952941 1" mesh="moving_side"/>
              </body>
            </body>
          </body>
        </body>
      </body>
    </body>
  </worldbody>
  <actuator>
    <position joint="shoulder_pan_joint" ctrlrange="-3.14159 3.14159" ctrllimited="true" kp="20" />
    <position joint="shoulder_lift_joint" ctrlrange="-1.5708 1.22173" ctrllimited="true" kp="20" />
    <position joint="elbow_flex_joint" ctrlrange="-1.48353 1.74533" ctrllimited="true" kp="20" />
    <position joint="wrist_flex_joint" ctrlrange="-1.91986 1.91986" ctrllimited="true" kp="20" />
    <position joint="wrist_roll_joint" ctrlrange="-2.96706 2.96706" ctrllimited="true" kp="20" />
    <position joint="gripper_joint" ctrlrange="-1.74533 0.0523599" ctrllimited="true" kp="20" />
  </actuator>
</mujoco>
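In these scenes the range of every hinge joint is repeated verbatim as the ctrlrange of the position actuator that drives it, so the two copies can drift apart when only one is edited. The sketch below uses only the Python standard library to flag such mismatches; check_actuator_ranges is a hypothetical helper, not part of the repository, and because the values are copied textually it compares the parsed floats for exact equality.

```python
import xml.etree.ElementTree as ET


def _parse_pair(text):
    """Parse a MuJoCo "lo hi" attribute string into a tuple of floats."""
    return tuple(float(v) for v in text.split())


def check_actuator_ranges(mjcf_text):
    """Return (joint, joint_range, ctrlrange) for every <position> actuator
    whose ctrlrange differs from the range of the joint it drives."""
    root = ET.fromstring(mjcf_text.strip())
    # <freejoint> is a separate tag, so only <joint> elements are collected.
    joint_ranges = {
        j.get("name"): _parse_pair(j.get("range"))
        for j in root.iter("joint")
        if j.get("range") is not None
    }
    mismatches = []
    for act in root.iter("position"):
        joint = act.get("joint")
        ctrl = act.get("ctrlrange")
        if ctrl is None or joint not in joint_ranges:
            continue  # nothing to compare against
        ctrl_range = _parse_pair(ctrl)
        if ctrl_range != joint_ranges[joint]:
            mismatches.append((joint, joint_ranges[joint], ctrl_range))
    return mismatches


# Trimmed excerpt of the gripper joint and its actuator from the scene above.
SNIPPET = """
<mujoco>
  <worldbody>
    <body name="moving_side">
      <joint name="gripper_joint" axis="0 0 1" range="-1.74533 0.0523599"/>
    </body>
  </worldbody>
  <actuator>
    <position joint="gripper_joint" ctrlrange="-1.74533 0.0523599" kp="20"/>
  </actuator>
</mujoco>
"""

if __name__ == "__main__":
    print(check_actuator_ranges(SNIPPET))
```

To check a whole scene file, pass its text instead, e.g. check_actuator_ranges(open(path).read()); an empty list means every position actuator matches its joint. ElementTree does not resolve the mesh files, so the check runs without the STL assets.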

+137 -0 examples/alexk-lcr/assets/simulation/push_cube.xml

@@ -0,0 +1,137 @@
<mujoco model="bd1 scene">
  <option timestep="0.005"/>
  <compiler angle="radian" autolimits="true"/>
  <asset>
    <mesh name="base" file="base.stl"/>
    <mesh name="dc11_a01_spacer_dummy" file="dc11_a01_spacer_dummy.stl"/>
    <mesh name="dc11_a01_dummy" file="dc11_a01_dummy.stl"/>
    <mesh name="rotation_connector" file="rotation_connector.stl"/>
    <mesh name="arm_connector" file="arm_connector.stl"/>
    <mesh name="dc15_a01_horn_idle2_dummy" file="dc15_a01_horn_idle2_dummy.stl"/>
    <mesh name="dc15_a01_case_m_dummy" file="dc15_a01_case_m_dummy.stl"/>
    <mesh name="dc15_a01_case_f_dummy" file="dc15_a01_case_f_dummy.stl"/>
    <mesh name="dc15_a01_horn_dummy" file="dc15_a01_horn_dummy.stl"/>
    <mesh name="dc15_a01_case_b_dummy" file="dc15_a01_case_b_dummy.stl"/>
    <mesh name="connector" file="connector.stl"/>
    <mesh name="shoulder_rotation" file="shoulder_rotation.stl"/>
    <mesh name="static_side" file="static_side.stl"/>
    <mesh name="moving_side" file="moving_side.stl"/>
    <texture type="skybox" builtin="gradient" rgb1="0.3 0.5 0.7" rgb2="0 0 0" width="512" height="3072" />
    <texture type="2d" name="groundplane" builtin="checker" mark="edge" rgb1="0.2 0.3 0.4" rgb2="0.1 0.2 0.3" markrgb="0.8 0.8 0.8" width="300" height="300" />
    <material name="groundplane" texture="groundplane" texuniform="true" texrepeat="5 5" reflectance="0.2" />
  </asset>
  <visual>
    <headlight diffuse="0.6 0.6 0.6" ambient="0.3 0.3 0.3" specular="0 0 0" />
    <rgba haze="0.15 0.25 0.35 1" />
    <global azimuth="150" elevation="-20" offheight="640" />
  </visual>
  <worldbody>
    <light pos="0 0 3" dir="0 0 -1" directional="false" />
    <body name="floor">
      <geom pos="0 0 0" name="floor" size="0 0 .125" type="plane" material="groundplane" conaffinity="1" contype="1" />
    </body>
    <body name="cube" pos="0.1 0.1 0.01">
      <freejoint name="cube"/>
      <inertial pos="0 0 0" mass="0.1" diaginertia="0.00001125 0.00001125 0.00001125"/>
      <geom friction="0.5" condim="3" pos="0 0 0" size="0.015 0.015 0.015" type="box" name="cube" rgba="0.5 0 0 1" priority="1"/>
    </body>
    <camera name="camera_front" pos="0.049 0.888 0.317" xyaxes="-0.998 0.056 -0.000 -0.019 -0.335 0.942"/>
    <camera name="camera_top" pos="0 0 1" euler="0 0 0" mode="fixed"/>
    <camera name="camera_vizu" pos="-0.1 0.6 0.3" quat="-0.15 -0.1 0.6 1"/>
    <geom pos="0.0401555 -0.0353754 -0.0242427" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="base"/>
    <geom pos="0.0511555 0.0406246 0.0099573" quat="0 0 0 1" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0291555 0.000624643 0.0099573" quat="0 0 0 1" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0511555 0.000624643 -0.0184427" quat="0 0 1 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0401555 0.0326246 -0.0042427" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc11_a01_dummy"/>
    <geom pos="0.0291555 0.000624643 -0.0184427" quat="0 0.707107 0.707107 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0291555 0.0406246 0.0099573" quat="0.707107 0 0 -0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0511555 0.0406246 -0.0184427" quat="0 0.707107 -0.707107 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0291555 0.0406246 -0.0184427" quat="0 -1 0 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <geom pos="0.0511555 0.000624643 0.0099573" quat="0.707107 0 0 0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
    <body name="pitch1_assembly" pos="0.0401555 0.0326246 0.0166573">
      <inertial pos="-0.000767103 -0.0121505 0.0134241" quat="0.498429 0.53272 -0.473938 0.493113" mass="0.0606831" diaginertia="1.86261e-05 1.72746e-05 1.11693e-05"/>
      <joint name="shoulder_pan_joint" pos="0 0 0" axis="0 0 1" range="-3.14159 3.14159" damping="0.1" actuatorfrcrange="-0.5 0.5" actuatorfrclimited="true"/>
      <geom pos="0 0 -0.0209" type="mesh" rgba="0.231373 0.380392 0.705882 1" mesh="rotation_connector"/>
      <geom pos="-0.014 0.008 0.0264" quat="0 -0.707107 0 0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
      <geom pos="-0.014 -0.032 0.0044" quat="0 -0.707107 0 0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
      <geom pos="0.0144 -0.032 0.0264" quat="0.707107 0 0.707107 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
      <geom pos="0.0002 0 0.0154" quat="0.707107 0 -0.707107 0" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc11_a01_dummy"/>
      <geom pos="0.0144 -0.032 0.0044" quat="0.5 0.5 0.5 0.5" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
      <geom pos="-0.014 0.008 0.0044" quat="0.5 0.5 -0.5 -0.5" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
      <geom pos="0.0144 0.008 0.0264" quat="0.5 -0.5 0.5 -0.5" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
      <geom pos="0.0144 0.008 0.0044" quat="0 0.707107 0 0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
      <geom pos="-0.014 -0.032 0.0264" quat="0.5 -0.5 -0.5 0.5" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/>
      <body name="pitch2_assembly" pos="-0.0188 0 0.0154" quat="0 0.707107 0 0.707107">
        <inertial pos="0.0766242 -0.00031229 0.0187402" quat="0.52596 0.513053 0.489778 0.469319" mass="0.0432446" diaginertia="7.21796e-05 7.03107e-05 1.07533e-05"/>
        <joint name="shoulder_lift_joint" pos="0 0 0" axis="0 0 1" range="-1.5708 1.22173" damping="0.1" actuatorfrcrange="-0.5 0.5" actuatorfrclimited="true"/>
        <geom pos="0 0 0.019" quat="0.5 -0.5 -0.5 -0.5" type="mesh" rgba="0.980392 0.713726 0.00392157 1" mesh="arm_connector"/>
        <geom pos="0.1083 -0.0148 0.03035" quat="1 0 0 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc15_a01_horn_idle2_dummy"/>
        <geom pos="0.1083 -0.0148 0.01075" quat="0 -1 0 0" type="mesh" rgba="0.615686 0.811765 0.929412 1" mesh="dc15_a01_case_m_dummy"/>
        <geom pos="0.1083 -0.0148 0.01075" quat="0 -1 0 0" type="mesh" rgba="0.980392 0.713726 0.00392157 1" mesh="dc15_a01_case_f_dummy"/>
        <geom pos="0.1083 -0.0148 0.00715" quat="0 0 0.707107 -0.707107" type="mesh" rgba="0.972549 0.529412 0.00392157 1" mesh="dc15_a01_horn_dummy"/>
        <geom pos="0.1083 -0.0148 0.03025" quat="0 -1 0 0" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc15_a01_case_b_dummy"/>
        <body name="pitch3_assembly" pos="0.1083 -0.0148 0.00425" quat="0.707107 0 0 0.707107">
          <inertial pos="-0.0551014 -0.00287792 0.0144813" quat="0.500323 0.499209 0.499868 0.5006" mass="0.0788335" diaginertia="6.80912e-05 6.45748e-05 9.84479e-06"/>
          <joint name="elbow_flex_joint" pos="0 0 0" axis="0 0 1" range="-1.48353 1.74533" damping="0.1" actuatorfrcrange="-0.5 0.5" actuatorfrclimited="true"/>
          <geom pos="-0.00863031 0.00847376 0.0145" quat="0.5 0.5 0.5 -0.5" type="mesh" rgba="0.615686 0.811765 0.929412 1" mesh="connector"/>
          <geom pos="-0.100476 -0.00269986 0.02635" quat="0.707107 0 0 -0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc15_a01_horn_idle2_dummy"/>
          <geom pos="-0.100476 -0.00269986 0.00675" quat="0 -0.707107 0.707107 0" type="mesh" rgba="0.615686 0.811765 0.929412 1" mesh="dc15_a01_case_m_dummy"/>
          <geom pos="-0.100476 -0.00269986 0.00675" quat="0 -0.707107 0.707107 0" type="mesh" rgba="0.980392 0.713726 0.00392157 1" mesh="dc15_a01_case_f_dummy"/>
          <geom pos="-0.100476 -0.00269986 0.00315" quat="0.5 -0.5 -0.5 0.5" type="mesh" rgba="0.972549 0.529412 0.00392157 1" mesh="dc15_a01_horn_dummy"/>
          <geom pos="-0.100476 -0.00269986 0.02625" quat="0 -0.707107 0.707107 0" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc15_a01_case_b_dummy"/>
          <body name="effector_roll_assembly" pos="-0.100476 -0.00269986 0.02925" quat="0 -0.707107 -0.707107 0">
            <inertial pos="-1.65017e-05 -0.02659 0.0195388" quat="0.936813 0.349829 -0.00055331 -0.000300569" mass="0.0240506" diaginertia="6.03208e-06 4.12894e-06 3.3522e-06"/>
            <joint name="wrist_flex_joint" pos="0 0 0" axis="0 0 1" range="-1.91986 1.91986" damping="0.1" actuatorfrcrange="-0.5 0.5" actuatorfrclimited="true"/>
            <geom pos="-0.0109998 -0.0190002 0.039" quat="0.707107 -0.707107 0 0" type="mesh" rgba="0.615686 0.811765 0.929412 1" mesh="shoulder_rotation"/>
            <geom pos="-7.44154e-06 -0.0385002 0.0133967" quat="0 0 0.707107 -0.707107" type="mesh" rgba="0.615686 0.811765 0.929412 1" mesh="dc15_a01_case_m_dummy"/>
            <geom pos="-7.44154e-06 -0.0385002 0.0133967" quat="0 0 0.707107 -0.707107" type="mesh" rgba="0.980392 0.713726 0.00392157 1" mesh="dc15_a01_case_f_dummy"/>
            <geom pos="-7.44154e-06 -0.0421002 0.0133967" quat="0 1 0 0" type="mesh" rgba="0.972549 0.529412 0.00392157 1" mesh="dc15_a01_horn_dummy"/>
            <geom pos="-7.44154e-06 -0.0190002 0.0133967" quat="0 0 0.707107 -0.707107" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc15_a01_case_b_dummy"/>
            <body name="gripper_assembly" pos="-7.44154e-06 -0.0450002 0.0133967" quat="0.5 -0.5 -0.5 -0.5">
              <inertial pos="-0.00548595 -0.000433143 -0.0190793" quat="0.700194 0.164851 0.167361 0.674197" mass="0.0360627" diaginertia="1.3261e-05 1.231e-05 5.3532e-06"/>
              <joint name="wrist_roll_joint" pos="0 0 0" axis="0 0 1" range="-2.96706 2.96706" damping="0.1" actuatorfrcrange="-0.5 0.5" actuatorfrclimited="true"/>
              <geom pos="-0.00075 -0.01475 -0.02" quat="0.707107 -0.707107 0 0" type="mesh" rgba="0.917647 0.917647 0.917647 1" mesh="static_side"/>
              <geom pos="0.00755 0.01135 -0.013" quat="0.5 -0.5 0.5 0.5" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc15_a01_horn_idle2_dummy"/>
              <geom pos="0.00755 -0.00825 -0.013" quat="0.5 0.5 0.5 -0.5" type="mesh" rgba="0.615686 0.811765 0.929412 1" mesh="dc15_a01_case_m_dummy"/>
              <geom pos="0.00755 -0.00825 -0.013" quat="0.5 0.5 0.5 -0.5" type="mesh" rgba="0.980392 0.713726 0.00392157 1" mesh="dc15_a01_case_f_dummy"/>
              <geom pos="0.00755 -0.01185 -0.013" quat="0 -0.707107 0 -0.707107" type="mesh" rgba="0.972549 0.529412 0.00392157 1" mesh="dc15_a01_horn_dummy"/>
              <geom pos="0.00755 0.01125 -0.013" quat="0.5 0.5 0.5 -0.5" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc15_a01_case_b_dummy"/>

| <body name="moving_side" pos="0.00755 -0.01475 -0.013" quat="0.707107 -0.707107 0 0"> | |||

| <inertial pos="-0.000395599 0.022415 0.0145636" quat="0.722353 0.689129 0.0389102 0.0423547" mass="0.0089856" diaginertia="3.28451e-06 2.24898e-06 1.41539e-06"/> | |||

| <joint name="gripper_joint" pos="0 0 0" axis="0 0 1" range="-1.74533 0.0523599" damping="0.1" actuatorfrcrange="-0.5 0.5" actuatorfrclimited="true"/> | |||

| <geom pos="-0.00838199 -0.000256591 -0.003" type="mesh" rgba="0.768627 0.886275 0.952941 1" mesh="moving_side"/> | |||

| </body> | |||

| </body> | |||

| </body> | |||

| </body> | |||

| </body> | |||

| </body> | |||

| <site name="cube_target" pos="1 0 0" size="0.01" rgba="1 0 0 1" /> | |||

| </worldbody> | |||

| <actuator> | |||

| <position joint="shoulder_pan_joint" ctrlrange="-3.14159 3.14159" ctrllimited="true" kp="20" /> | |||

| <position joint="shoulder_lift_joint" ctrlrange="-1.5708 1.22173" ctrllimited="true" kp="20" /> | |||

| <position joint="elbow_flex_joint" ctrlrange="-1.48353 1.74533" ctrllimited="true" kp="20" /> | |||

| <position joint="wrist_flex_joint" ctrlrange="-1.91986 1.91986" ctrllimited="true" kp="20" /> | |||

| <position joint="wrist_roll_joint" ctrlrange="-2.96706 2.96706" ctrllimited="true" kp="20" /> | |||

| <position joint="gripper_joint" ctrlrange="-1.74533 0.0523599" ctrllimited="true" kp="20" /> | |||

| </actuator> | |||

| </mujoco> | |||
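The `<actuator>` block above pairs every joint with a MuJoCo `position` actuator (`kp="20"`) whose `ctrlrange` mirrors the joint's `range`. Each joint also caps actuator torque with `actuatorfrcrange="-0.5 0.5"`. The plain-Python sketch below is not code from this repository. It models what that combination implies, assuming MuJoCo's default `kv=0` and unit gear. Under those assumptions the commanded position is clamped to `ctrlrange`, the force is `kp * (target - qpos)`, and the result is clipped to the joint's force range. The helper names (`position_actuator_force`, `CTRL_RANGE`) are hypothetical.

```python
# Hypothetical plain-Python model of the <position> actuators in this scene.
# Assumptions: default kv = 0 and gear = 1, so MuJoCo's actuator force is
# kp * (ctrl - qpos); joint damping (0.1 * qvel) is a separate passive term
# and is not modelled here.

KP = 20.0          # kp on every <position> actuator in this file
FORCE_LIMIT = 0.5  # actuatorfrcrange="-0.5 0.5" on every joint (N*m)

# ctrlrange per actuator, copied from the <actuator> block (radians)
CTRL_RANGE = {
    "shoulder_pan_joint": (-3.14159, 3.14159),
    "shoulder_lift_joint": (-1.5708, 1.22173),
    "elbow_flex_joint": (-1.48353, 1.74533),
    "wrist_flex_joint": (-1.91986, 1.91986),
    "wrist_roll_joint": (-2.96706, 2.96706),
    "gripper_joint": (-1.74533, 0.0523599),
}


def clamp(value: float, low: float, high: float) -> float:
    """Limit value to the closed interval [low, high]."""
    return max(low, min(high, value))


def position_actuator_force(joint: str, ctrl: float, qpos: float) -> float:
    """Torque applied to `joint` when the controller commands position `ctrl`."""
    low, high = CTRL_RANGE[joint]
    target = clamp(ctrl, low, high)                   # ctrllimited="true"
    force = KP * (target - qpos)                      # proportional term only
    return clamp(force, -FORCE_LIMIT, FORCE_LIMIT)    # actuatorfrclimited="true"


if __name__ == "__main__":
    # Small tracking error: stays in the linear region (20 * 0.01).
    print(position_actuator_force("elbow_flex_joint", 0.01, 0.0))
    # Large tracking error: saturates at the 0.5 torque limit.
    print(position_actuator_force("elbow_flex_joint", 1.0, 0.0))
```

With these values any tracking error larger than 0.5 / 20 = 0.025 rad saturates the actuator. In practice the simulated arm therefore moves at a torque-limited rate toward distant targets rather than snapping to them.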

examples/alexk-lcr/assets/simulation/reach_cube.xml

| @@ -0,0 +1,135 @@ | |||

| <mujoco model="bd1 scene"> | |||

| <option timestep="0.005"/> | |||

| <compiler angle="radian" autolimits="true"/> | |||

| <asset> | |||

| <mesh name="base" file="base.stl"/> | |||

| <mesh name="dc11_a01_spacer_dummy" file="dc11_a01_spacer_dummy.stl"/> | |||

| <mesh name="dc11_a01_dummy" file="dc11_a01_dummy.stl"/> | |||

| <mesh name="rotation_connector" file="rotation_connector.stl"/> | |||

| <mesh name="arm_connector" file="arm_connector.stl"/> | |||

| <mesh name="dc15_a01_horn_idle2_dummy" file="dc15_a01_horn_idle2_dummy.stl"/> | |||

| <mesh name="dc15_a01_case_m_dummy" file="dc15_a01_case_m_dummy.stl"/> | |||

| <mesh name="dc15_a01_case_f_dummy" file="dc15_a01_case_f_dummy.stl"/> | |||

| <mesh name="dc15_a01_horn_dummy" file="dc15_a01_horn_dummy.stl"/> | |||

| <mesh name="dc15_a01_case_b_dummy" file="dc15_a01_case_b_dummy.stl"/> | |||

| <mesh name="connector" file="connector.stl"/> | |||

| <mesh name="shoulder_rotation" file="shoulder_rotation.stl"/> | |||

| <mesh name="static_side" file="static_side.stl"/> | |||

| <mesh name="moving_side" file="moving_side.stl"/> | |||

| <texture type="skybox" builtin="gradient" rgb1="0.3 0.5 0.7" rgb2="0 0 0" width="512" height="3072" /> | |||

| <texture type="2d" name="groundplane" builtin="checker" mark="edge" rgb1="0.2 0.3 0.4" rgb2="0.1 0.2 0.3" markrgb="0.8 0.8 0.8" width="300" height="300" /> | |||

| <material name="groundplane" texture="groundplane" texuniform="true" texrepeat="5 5" reflectance="0.2" /> | |||

| </asset> | |||

| <visual> | |||

| <headlight diffuse="0.6 0.6 0.6" ambient="0.3 0.3 0.3" specular="0 0 0" /> | |||

| <rgba haze="0.15 0.25 0.35 1" /> | |||

| <global azimuth="150" elevation="-20" offheight="640" /> | |||

| </visual> | |||

| <worldbody> | |||

| <light pos="0 0 3" dir="0 0 -1" directional="false" /> | |||

| <body name="floor"> | |||

| <geom pos="0 0 0" name="floor" size="0 0 .125" type="plane" material="groundplane" conaffinity="1" contype="1" /> | |||

| </body> | |||

| <body name="cube" pos="0.1 0.1 0.01"> | |||

| <freejoint name="cube"/> | |||

| <inertial pos="0 0 0" mass="0.1" diaginertia="0.00001125 0.00001125 0.00001125"/> | |||

| <geom friction="0.5" condim="3" pos="0 0 0" size="0.015 0.015 0.015" type="box" name="cube" rgba="0.5 0 0 1" priority="1"/> | |||

| </body> | |||

| <camera name="camera_front" pos="0.049 0.888 0.317" xyaxes="-0.998 0.056 -0.000 -0.019 -0.335 0.942"/> | |||

| <camera name="camera_top" pos="0 0 1" euler="0 0 0" mode="fixed"/> | |||

| <camera name="camera_vizu" pos="-0.1 0.6 0.3" quat="-0.15 -0.1 0.6 1"/> | |||

| <geom pos="0.0401555 -0.0353754 -0.0242427" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="base"/> | |||

| <geom pos="0.0511555 0.0406246 0.0099573" quat="0 0 0 1" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="0.0291555 0.000624643 0.0099573" quat="0 0 0 1" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="0.0511555 0.000624643 -0.0184427" quat="0 0 1 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="0.0401555 0.0326246 -0.0042427" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc11_a01_dummy"/> | |||

| <geom pos="0.0291555 0.000624643 -0.0184427" quat="0 0.707107 0.707107 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="0.0291555 0.0406246 0.0099573" quat="0.707107 0 0 -0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="0.0511555 0.0406246 -0.0184427" quat="0 0.707107 -0.707107 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="0.0291555 0.0406246 -0.0184427" quat="0 -1 0 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="0.0511555 0.000624643 0.0099573" quat="0.707107 0 0 0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <body name="pitch1_assembly" pos="0.0401555 0.0326246 0.0166573"> | |||

| <inertial pos="-0.000767103 -0.0121505 0.0134241" quat="0.498429 0.53272 -0.473938 0.493113" mass="0.0606831" diaginertia="1.86261e-05 1.72746e-05 1.11693e-05"/> | |||

| <joint name="shoulder_pan_joint" pos="0 0 0" axis="0 0 1" range="-3.14159 3.14159" damping="0.1" actuatorfrcrange="-0.5 0.5" actuatorfrclimited="true"/> | |||

| <geom pos="0 0 -0.0209" type="mesh" rgba="0.231373 0.380392 0.705882 1" mesh="rotation_connector"/> | |||

| <geom pos="-0.014 0.008 0.0264" quat="0 -0.707107 0 0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="-0.014 -0.032 0.0044" quat="0 -0.707107 0 0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="0.0144 -0.032 0.0264" quat="0.707107 0 0.707107 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="0.0002 0 0.0154" quat="0.707107 0 -0.707107 0" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc11_a01_dummy"/> | |||

| <geom pos="0.0144 -0.032 0.0044" quat="0.5 0.5 0.5 0.5" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="-0.014 0.008 0.0044" quat="0.5 0.5 -0.5 -0.5" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="0.0144 0.008 0.0264" quat="0.5 -0.5 0.5 -0.5" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="0.0144 0.008 0.0044" quat="0 0.707107 0 0.707107" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <geom pos="-0.014 -0.032 0.0264" quat="0.5 -0.5 -0.5 0.5" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc11_a01_spacer_dummy"/> | |||

| <body name="pitch2_assembly" pos="-0.0188 0 0.0154" quat="0 0.707107 0 0.707107"> | |||

| <inertial pos="0.0766242 -0.00031229 0.0187402" quat="0.52596 0.513053 0.489778 0.469319" mass="0.0432446" diaginertia="7.21796e-05 7.03107e-05 1.07533e-05"/> | |||

| <joint name="shoulder_lift_joint" pos="0 0 0" axis="0 0 1" range="-1.5708 1.22173" damping="0.1" actuatorfrcrange="-0.5 0.5" actuatorfrclimited="true"/> | |||

| <geom pos="0 0 0.019" quat="0.5 -0.5 -0.5 -0.5" type="mesh" rgba="0.980392 0.713726 0.00392157 1" mesh="arm_connector"/> | |||

| <geom pos="0.1083 -0.0148 0.03035" quat="1 0 0 0" type="mesh" rgba="0.647059 0.647059 0.647059 1" mesh="dc15_a01_horn_idle2_dummy"/> | |||

| <geom pos="0.1083 -0.0148 0.01075" quat="0 -1 0 0" type="mesh" rgba="0.615686 0.811765 0.929412 1" mesh="dc15_a01_case_m_dummy"/> | |||

| <geom pos="0.1083 -0.0148 0.01075" quat="0 -1 0 0" type="mesh" rgba="0.980392 0.713726 0.00392157 1" mesh="dc15_a01_case_f_dummy"/> | |||

| <geom pos="0.1083 -0.0148 0.00715" quat="0 0 0.707107 -0.707107" type="mesh" rgba="0.972549 0.529412 0.00392157 1" mesh="dc15_a01_horn_dummy"/> | |||

| <geom pos="0.1083 -0.0148 0.03025" quat="0 -1 0 0" type="mesh" rgba="0.498039 0.498039 0.498039 1" mesh="dc15_a01_case_b_dummy"/> | |||

| <body name="pitch3_assembly" pos="0.1083 -0.0148 0.00425" quat="0.707107 0 0 0.707107"> | |||

| <inertial pos="-0.0551014 -0.00287792 0.0144813" quat="0.500323 0.499209 0.499868 0.5006" mass="0.0788335" diaginertia="6.80912e-05 6.45748e-05 9.84479e-06"/> | |||