100 changed files with 8883 additions and 1465 deletions

-

+8 -8.github/workflows/ci.yml

-

+8 -2.github/workflows/node-hub-ci-cd.yml

-

+16 -16.github/workflows/node_hub_test.sh

-

+1 -0.gitignore

-

+1321 -1025Cargo.lock

-

+30 -23Cargo.toml

-

+73 -0Changelog.md

-

+36 -16README.md

-

+43 -47apis/python/node/dora/cuda.py

-

+1 -0apis/rust/node/src/event_stream/scheduler.rs

-

+20 -19apis/rust/node/src/node/mod.rs

-

+7 -1benches/llms/README.md

-

+2 -0benches/llms/llama_cpp_python.yaml

-

+2 -0benches/llms/mistralrs.yaml

-

+2 -0benches/llms/phi4.yaml

-

+2 -0benches/llms/qwen2.5.yaml

-

+2 -0benches/llms/transformers.yaml

-

+22 -0benches/mllm/pyproject.toml

-

+1 -0binaries/cli/Cargo.toml

-

+91 -21binaries/cli/src/lib.rs

-

+91 -12binaries/daemon/src/lib.rs

-

+42 -11binaries/daemon/src/spawn.rs

-

+9 -5binaries/runtime/src/lib.rs

-

+62 -0examples/av1-encoding/dataflow.yml

-

+68 -0examples/av1-encoding/dataflow_reachy.yml

-

+54 -0examples/av1-encoding/ios-dev.yaml

-

+3 -1examples/benchmark/dataflow.yml

-

+18 -27examples/benchmark/node/src/main.rs

-

+2 -3examples/benchmark/sink/src/main.rs

-

+11 -12examples/cuda-benchmark/demo_receiver.py

-

+8 -10examples/cuda-benchmark/receiver.py

-

+3 -0examples/depth_camera/ios-dev.yaml

-

+11 -0examples/mediapipe/README.md

-

+26 -0examples/mediapipe/realsense-dev.yml

-

+26 -0examples/mediapipe/rgb-dev.yml

-

+250 -0examples/reachy2-remote/dataflow_reachy.yml

-

+76 -0examples/reachy2-remote/parse_bbox.py

-

+62 -0examples/reachy2-remote/parse_point.py

-

+300 -0examples/reachy2-remote/parse_pose.py

-

+135 -0examples/reachy2-remote/parse_whisper.py

-

+42 -0examples/reachy2-remote/whisper-dev.yml

-

+1 -1examples/rerun-viewer/dataflow.yml

-

+10 -4examples/rerun-viewer/run.rs

-

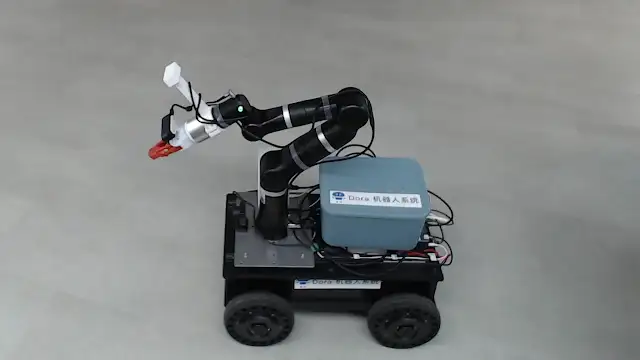

+94 -0examples/so100-remote/README.md

-

+50 -0examples/so100-remote/no_torque.yml

-

+69 -0examples/so100-remote/parse_bbox.py

-

+161 -0examples/so100-remote/parse_pose.py

-

+56 -0examples/so100-remote/parse_whisper.py

-

+180 -0examples/so100-remote/qwenvl-compression.yml

-

+153 -0examples/so100-remote/qwenvl-remote.yml

-

+142 -0examples/so100-remote/qwenvl.yml

-

+51 -0examples/tracker/facebook_cotracker.yml

-

+63 -0examples/tracker/parse_bbox.py

-

+67 -0examples/tracker/qwenvl_cotracker.yml

-

+4 -4libraries/extensions/telemetry/metrics/Cargo.toml

-

+2 -2libraries/extensions/telemetry/metrics/src/lib.rs

-

+73 -28libraries/extensions/telemetry/tracing/src/lib.rs

-

+1 -1node-hub/dora-argotranslate/pyproject.toml

-

+221 -0node-hub/dora-cotracker/README.md

-

+40 -0node-hub/dora-cotracker/demo.yml

-

+11 -0node-hub/dora-cotracker/dora_cotracker/__init__.py

-

+5 -0node-hub/dora-cotracker/dora_cotracker/__main__.py

-

+212 -0node-hub/dora-cotracker/dora_cotracker/main.py

-

+32 -0node-hub/dora-cotracker/pyproject.toml

-

+9 -0node-hub/dora-cotracker/tests/test_dora_cotracker.py

-

+32 -0node-hub/dora-dav1d/Cargo.toml

-

+26 -0node-hub/dora-dav1d/pyproject.toml

-

+219 -0node-hub/dora-dav1d/src/lib.rs

-

+3 -0node-hub/dora-dav1d/src/main.rs

-

+5 -3node-hub/dora-distil-whisper/pyproject.toml

-

+1 -1node-hub/dora-echo/pyproject.toml

-

+15 -14node-hub/dora-internvl/pyproject.toml

-

+85 -39node-hub/dora-ios-lidar/dora_ios_lidar/main.py

-

+2 -2node-hub/dora-ios-lidar/pyproject.toml

-

+1 -1node-hub/dora-keyboard/pyproject.toml

-

+41 -5node-hub/dora-kit-car/README.md

-

+1 -1node-hub/dora-kokoro-tts/pyproject.toml

-

+0 -3node-hub/dora-llama-cpp-python/pyproject.toml

-

+3 -2node-hub/dora-magma/pyproject.toml

-

+40 -0node-hub/dora-mediapipe/README.md

-

+13 -0node-hub/dora-mediapipe/dora_mediapipe/__init__.py

-

+6 -0node-hub/dora-mediapipe/dora_mediapipe/__main__.py

-

+136 -0node-hub/dora-mediapipe/dora_mediapipe/main.py

-

+25 -0node-hub/dora-mediapipe/pyproject.toml

-

+13 -0node-hub/dora-mediapipe/tests/test_dora_mediapipe.py

-

+2252 -0node-hub/dora-mediapipe/uv.lock

-

+1 -1node-hub/dora-microphone/pyproject.toml

-

+1 -1node-hub/dora-object-to-pose/Cargo.toml

-

+0 -2node-hub/dora-object-to-pose/pyproject.toml

-

+56 -45node-hub/dora-object-to-pose/src/lib.rs

-

+1 -1node-hub/dora-openai-server/pyproject.toml

-

+14 -12node-hub/dora-opus/pyproject.toml

-

+4 -5node-hub/dora-opus/tests/test_translate.py

-

+1175 -0node-hub/dora-opus/uv.lock

-

+2 -0node-hub/dora-outtetts/README.md

-

+4 -14node-hub/dora-outtetts/dora_outtetts/tests/test_main.py

-

+1 -1node-hub/dora-outtetts/pyproject.toml

-

+9 -11node-hub/dora-parler/pyproject.toml

-

+1 -1node-hub/dora-phi4/pyproject.toml

-

+1 -1node-hub/dora-piper/pyproject.toml

+8 -8 .github/workflows/ci.yml

| @@ -63,11 +63,11 @@ jobs: | |||

| cache-directories: ${{ env.CARGO_TARGET_DIR }} | |||

| - name: "Check" | |||

| run: cargo check --all | |||

| run: cargo check --all --exclude dora-dav1d --exclude dora-rav1e | |||

| - name: "Build (Without Python dep as it is build with maturin)" | |||

| run: cargo build --all --exclude dora-node-api-python --exclude dora-operator-api-python --exclude dora-ros2-bridge-python | |||

| run: cargo build --all --exclude dora-dav1d --exclude dora-rav1e --exclude dora-node-api-python --exclude dora-operator-api-python --exclude dora-ros2-bridge-python | |||

| - name: "Test" | |||

| run: cargo test --all --exclude dora-node-api-python --exclude dora-operator-api-python --exclude dora-ros2-bridge-python | |||

| run: cargo test --all --exclude dora-dav1d --exclude dora-rav1e --exclude dora-node-api-python --exclude dora-operator-api-python --exclude dora-ros2-bridge-python | |||

| # Run examples as separate job because otherwise we will exhaust the disk | |||

| # space of the GitHub action runners. | |||

| @@ -310,7 +310,7 @@ jobs: | |||

| # Test Rust template Project | |||

| dora new test_rust_project --internal-create-with-path-dependencies | |||

| cd test_rust_project | |||

| cargo build --all | |||

| cargo build --all --exclude dora-dav1d --exclude dora-rav1e | |||

| dora up | |||

| dora list | |||

| dora start dataflow.yml --name ci-rust-test --detach | |||

| @@ -459,12 +459,12 @@ jobs: | |||

| - run: cargo --version --verbose | |||

| - name: "Clippy" | |||

| run: cargo clippy --all | |||

| run: cargo clippy --all --exclude dora-dav1d --exclude dora-rav1e | |||

| - name: "Clippy (tracing feature)" | |||

| run: cargo clippy --all --features tracing | |||

| run: cargo clippy --all --exclude dora-dav1d --exclude dora-rav1e --features tracing | |||

| if: false # only the dora-runtime has this feature, but it is currently commented out | |||

| - name: "Clippy (metrics feature)" | |||

| run: cargo clippy --all --features metrics | |||

| run: cargo clippy --all --exclude dora-dav1d --exclude dora-rav1e --features metrics | |||

| if: false # only the dora-runtime has this feature, but it is currently commented out | |||

| rustfmt: | |||

| @@ -544,4 +544,4 @@ jobs: | |||

| with: | |||

| use-cross: true | |||

| command: check | |||

| args: --target ${{ matrix.platform.target }} --all --exclude dora-node-api-python --exclude dora-operator-api-python --exclude dora-ros2-bridge-python | |||

| args: --target ${{ matrix.platform.target }} --all --exclude dora-dav1d --exclude dora-rav1e --exclude dora-node-api-python --exclude dora-operator-api-python --exclude dora-ros2-bridge-python | |||
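The same pair of `--exclude` flags is repeated across the check, build, test, clippy, and cross-check steps in this workflow. As a rough illustration of keeping that list in one place, here is a minimal Python sketch; `cargo_args` and the two list names are hypothetical and are not part of the dora repository or its CI.

```python
# Hypothetical helper (not part of the dora repository): build the cargo
# argument list used by the CI steps from a single list of excluded crates.
AV1_CRATES = ["dora-dav1d", "dora-rav1e"]
PYTHON_CRATES = [
    "dora-node-api-python",
    "dora-operator-api-python",
    "dora-ros2-bridge-python",
]


def cargo_args(subcommand, excluded, extra=()):
    """Return e.g. ['cargo', 'check', '--all', '--exclude', 'x', ...]."""
    args = ["cargo", subcommand, "--all"]
    for crate in excluded:
        args += ["--exclude", crate]
    args += list(extra)
    return args


if __name__ == "__main__":
    print(" ".join(cargo_args("check", AV1_CRATES)))
    print(" ".join(cargo_args("test", AV1_CRATES + PYTHON_CRATES)))
```

Generating the commands this way would make a future exclusion a one-line change, at the cost of an extra indirection in the workflow file.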

+8 -2 .github/workflows/node-hub-ci-cd.yml

| @@ -13,7 +13,7 @@ on: | |||

| jobs: | |||

| find-jobs: | |||

| runs-on: ubuntu-22.04 | |||

| runs-on: ubuntu-24.04 | |||

| name: Find Jobs | |||

| outputs: | |||

| folders: ${{ steps.jobs.outputs.folders }} | |||

| @@ -34,7 +34,7 @@ jobs: | |||

| strategy: | |||

| fail-fast: ${{ github.event_name != 'workflow_dispatch' && !(github.event_name == 'release' && startsWith(github.ref, 'refs/tags/')) }} | |||

| matrix: | |||

| platform: [ubuntu-22.04, macos-14] | |||

| platform: [ubuntu-24.04, macos-14] | |||

| folder: ${{ fromJson(needs.find-jobs.outputs.folders )}} | |||

| steps: | |||

| - name: Checkout repository | |||

| @@ -52,7 +52,12 @@ jobs: | |||

| - name: Install system-level dependencies | |||

| if: runner.os == 'Linux' | |||

| run: | | |||

| sudo apt update | |||

| sudo apt-get install portaudio19-dev | |||

| sudo apt-get install libdav1d-dev nasm libudev-dev | |||

| mkdir -p $HOME/.rustup/toolchains/stable-x86_64-unknown-linux-gnu/lib/rustlib/x86_64-unknown-linux-gnu/lib | |||

| ln -s /lib/x86_64-linux-gnu/libdav1d.so $HOME/.rustup/toolchains/stable-x86_64-unknown-linux-gnu/lib/rustlib/x86_64-unknown-linux-gnu/lib/libdav1d.so | |||

| # Install mingw-w64 cross-compilers | |||

| sudo apt install g++-mingw-w64-x86-64 gcc-mingw-w64-x86-64 | |||

| @@ -60,6 +65,7 @@ jobs: | |||

| if: runner.os == 'MacOS' && (github.event_name == 'workflow_dispatch' || (github.event_name == 'release' && startsWith(github.ref, 'refs/tags/'))) | |||

| run: | | |||

| brew install portaudio | |||

| brew install dav1d nasm | |||

| - name: Set up Python | |||

| if: runner.os == 'Linux' || github.event_name == 'workflow_dispatch' || (github.event_name == 'release' && startsWith(github.ref, 'refs/tags/')) | |||

+16 -16 .github/workflows/node_hub_test.sh

| @@ -2,10 +2,10 @@ | |||

| set -euo | |||

| # List of ignored modules | |||

| ignored_folders=("dora-parler") | |||

| ignored_folders=("dora-parler" "dora-opus" "dora-internvl" "dora-magma") | |||

| # Skip test | |||

| skip_test_folders=("dora-internvl" "dora-parler" "dora-keyboard" "dora-microphone" "terminal-input") | |||

| skip_test_folders=("dora-internvl" "dora-parler" "dora-keyboard" "dora-microphone" "terminal-input" "dora-magma") | |||

| # Get current working directory | |||

| dir=$(pwd) | |||

| @@ -26,8 +26,8 @@ else | |||

| cargo build | |||

| cargo test | |||

| pip install "maturin[zig]" | |||

| maturin build --zig | |||

| pip install "maturin[zig, patchelf]" | |||

| maturin build --release --compatibility manylinux_2_28 --zig | |||

| # If GITHUB_EVENT_NAME is release or workflow_dispatch, publish the wheel on multiple platforms | |||

| if [ "$GITHUB_EVENT_NAME" == "release" ] || [ "$GITHUB_EVENT_NAME" == "workflow_dispatch" ]; then | |||

| # Free up ubuntu space | |||

| @@ -36,10 +36,10 @@ else | |||

| sudo rm -rf /usr/share/dotnet/ | |||

| sudo rm -rf /opt/ghc/ | |||

| maturin publish --skip-existing --zig | |||

| maturin publish --skip-existing --compatibility manylinux_2_28 --zig | |||

| # aarch64-unknown-linux-gnu | |||

| rustup target add aarch64-unknown-linux-gnu | |||

| maturin publish --target aarch64-unknown-linux-gnu --skip-existing --zig | |||

| maturin publish --target aarch64-unknown-linux-gnu --skip-existing --zig --compatibility manylinux_2_28 | |||

| # armv7-unknown-linux-musleabihf | |||

| rustup target add armv7-unknown-linux-musleabihf | |||

| @@ -53,8 +53,15 @@ else | |||

| fi | |||

| elif [[ -f "Cargo.toml" && -f "pyproject.toml" && "$(uname)" = "Darwin" ]]; then | |||

| pip install "maturin[zig, patchelf]" | |||

| # aarch64-apple-darwin | |||

| maturin build --release | |||

| # If GITHUB_EVENT_NAME is release or workflow_dispatch, publish the wheel | |||

| if [ "$GITHUB_EVENT_NAME" == "release" ] || [ "$GITHUB_EVENT_NAME" == "workflow_dispatch" ]; then | |||

| maturin publish --skip-existing | |||

| fi | |||

| # x86_64-apple-darwin | |||

| pip install "maturin[zig]" | |||

| rustup target add x86_64-apple-darwin | |||

| maturin build --target x86_64-apple-darwin --zig --release | |||

| # If GITHUB_EVENT_NAME is release or workflow_dispatch, publish the wheel | |||

| @@ -62,15 +69,7 @@ else | |||

| maturin publish --target x86_64-apple-darwin --skip-existing --zig | |||

| fi | |||

| # aarch64-apple-darwin | |||

| rustup target add aarch64-apple-darwin | |||

| maturin build --target aarch64-apple-darwin --zig --release | |||

| # If GITHUB_EVENT_NAME is release or workflow_dispatch, publish the wheel | |||

| if [ "$GITHUB_EVENT_NAME" == "release" ] || [ "$GITHUB_EVENT_NAME" == "workflow_dispatch" ]; then | |||

| maturin publish --target aarch64-apple-darwin --skip-existing --zig | |||

| fi | |||

| else | |||

| elif [[ "$(uname)" = "Linux" ]]; then | |||

| if [ -f "$dir/Cargo.toml" ]; then | |||

| echo "Running build and tests for Rust project in $dir..." | |||

| cargo check | |||

| @@ -96,6 +95,7 @@ else | |||

| uv run pytest | |||

| fi | |||

| if [ "$GITHUB_EVENT_NAME" == "release" ] || [ "$GITHUB_EVENT_NAME" == "workflow_dispatch" ]; then | |||

| uv build | |||

| uv publish --check-url https://pypi.org/simple | |||

| fi | |||

| fi | |||
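The two arrays at the top of this script separate folders that are ignored entirely from folders that are built but not tested. The sketch below restates that distinction in Python. The list contents are copied from the updated script, but `plan_for` and its return values are hypothetical, and how the shell script actually consults the arrays is an assumption, since that part of the file is not shown in the diff.

```python
# Lists copied from the updated node_hub_test.sh; the function below is a
# hypothetical illustration of how such lists are typically consulted.
IGNORED_FOLDERS = {"dora-parler", "dora-opus", "dora-internvl", "dora-magma"}
SKIP_TEST_FOLDERS = {
    "dora-internvl", "dora-parler", "dora-keyboard",
    "dora-microphone", "terminal-input", "dora-magma",
}


def plan_for(folder):
    """Return 'ignore', 'build-only', or 'build-and-test' for a node folder."""
    name = folder.rstrip("/").split("/")[-1]
    if name in IGNORED_FOLDERS:
        return "ignore"
    if name in SKIP_TEST_FOLDERS:
        return "build-only"
    return "build-and-test"


if __name__ == "__main__":
    for folder in ("node-hub/dora-opus", "node-hub/dora-keyboard", "node-hub/dora-echo"):
        print(folder, "->", plan_for(folder))
```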

+1 -0 .gitignore

| @@ -11,6 +11,7 @@ examples/**/*.txt | |||

| # Remove hdf and stl files | |||

| *.stl | |||

| *.dae | |||

| *.STL | |||

| *.hdf5 | |||

+1321 -1025 Cargo.lock

File diff suppressed because it is too large

+30 -23 Cargo.toml

| @@ -37,6 +37,9 @@ members = [ | |||

| "node-hub/dora-kit-car", | |||

| "node-hub/dora-object-to-pose", | |||

| "node-hub/dora-mistral-rs", | |||

| "node-hub/dora-rav1e", | |||

| "node-hub/dora-dav1d", | |||

| "node-hub/dora-rustypot", | |||

| "libraries/extensions/ros2-bridge", | |||

| "libraries/extensions/ros2-bridge/msg-gen", | |||

| "libraries/extensions/ros2-bridge/python", | |||

| @@ -47,34 +50,34 @@ members = [ | |||

| [workspace.package] | |||

| edition = "2021" | |||

| # Make sure to also bump `apis/node/python/__init__.py` version. | |||

| version = "0.3.10" | |||

| version = "0.3.11" | |||

| description = "`dora` goal is to be a low latency, composable, and distributed data flow." | |||

| documentation = "https://dora.carsmos.ai" | |||

| documentation = "https://dora-rs.ai" | |||

| license = "Apache-2.0" | |||

| repository = "https://github.com/dora-rs/dora/" | |||

| [workspace.dependencies] | |||

| dora-node-api = { version = "0.3.10", path = "apis/rust/node", default-features = false } | |||

| dora-node-api-python = { version = "0.3.10", path = "apis/python/node", default-features = false } | |||

| dora-operator-api = { version = "0.3.10", path = "apis/rust/operator", default-features = false } | |||

| dora-operator-api-macros = { version = "0.3.10", path = "apis/rust/operator/macros" } | |||

| dora-operator-api-types = { version = "0.3.10", path = "apis/rust/operator/types" } | |||

| dora-operator-api-python = { version = "0.3.10", path = "apis/python/operator" } | |||

| dora-operator-api-c = { version = "0.3.10", path = "apis/c/operator" } | |||

| dora-node-api-c = { version = "0.3.10", path = "apis/c/node" } | |||

| dora-core = { version = "0.3.10", path = "libraries/core" } | |||

| dora-arrow-convert = { version = "0.3.10", path = "libraries/arrow-convert" } | |||

| dora-tracing = { version = "0.3.10", path = "libraries/extensions/telemetry/tracing" } | |||

| dora-metrics = { version = "0.3.10", path = "libraries/extensions/telemetry/metrics" } | |||

| dora-download = { version = "0.3.10", path = "libraries/extensions/download" } | |||

| shared-memory-server = { version = "0.3.10", path = "libraries/shared-memory-server" } | |||

| communication-layer-request-reply = { version = "0.3.10", path = "libraries/communication-layer/request-reply" } | |||

| dora-cli = { version = "0.3.10", path = "binaries/cli" } | |||

| dora-runtime = { version = "0.3.10", path = "binaries/runtime" } | |||

| dora-daemon = { version = "0.3.10", path = "binaries/daemon" } | |||

| dora-coordinator = { version = "0.3.10", path = "binaries/coordinator" } | |||

| dora-ros2-bridge = { version = "0.3.10", path = "libraries/extensions/ros2-bridge" } | |||

| dora-ros2-bridge-msg-gen = { version = "0.3.10", path = "libraries/extensions/ros2-bridge/msg-gen" } | |||

| dora-node-api = { version = "0.3.11", path = "apis/rust/node", default-features = false } | |||

| dora-node-api-python = { version = "0.3.11", path = "apis/python/node", default-features = false } | |||

| dora-operator-api = { version = "0.3.11", path = "apis/rust/operator", default-features = false } | |||

| dora-operator-api-macros = { version = "0.3.11", path = "apis/rust/operator/macros" } | |||

| dora-operator-api-types = { version = "0.3.11", path = "apis/rust/operator/types" } | |||

| dora-operator-api-python = { version = "0.3.11", path = "apis/python/operator" } | |||

| dora-operator-api-c = { version = "0.3.11", path = "apis/c/operator" } | |||

| dora-node-api-c = { version = "0.3.11", path = "apis/c/node" } | |||

| dora-core = { version = "0.3.11", path = "libraries/core" } | |||

| dora-arrow-convert = { version = "0.3.11", path = "libraries/arrow-convert" } | |||

| dora-tracing = { version = "0.3.11", path = "libraries/extensions/telemetry/tracing" } | |||

| dora-metrics = { version = "0.3.11", path = "libraries/extensions/telemetry/metrics" } | |||

| dora-download = { version = "0.3.11", path = "libraries/extensions/download" } | |||

| shared-memory-server = { version = "0.3.11", path = "libraries/shared-memory-server" } | |||

| communication-layer-request-reply = { version = "0.3.11", path = "libraries/communication-layer/request-reply" } | |||

| dora-cli = { version = "0.3.11", path = "binaries/cli" } | |||

| dora-runtime = { version = "0.3.11", path = "binaries/runtime" } | |||

| dora-daemon = { version = "0.3.11", path = "binaries/daemon" } | |||

| dora-coordinator = { version = "0.3.11", path = "binaries/coordinator" } | |||

| dora-ros2-bridge = { version = "0.3.11", path = "libraries/extensions/ros2-bridge" } | |||

| dora-ros2-bridge-msg-gen = { version = "0.3.11", path = "libraries/extensions/ros2-bridge/msg-gen" } | |||

| dora-ros2-bridge-python = { path = "libraries/extensions/ros2-bridge/python" } | |||

| # versioned independently from the other dora crates | |||

| dora-message = { version = "0.4.4", path = "libraries/message" } | |||

| @@ -187,6 +190,10 @@ name = "cxx-ros2-dataflow" | |||

| path = "examples/c++-ros2-dataflow/run.rs" | |||

| required-features = ["ros2-examples"] | |||

| [[example]] | |||

| name = "rerun-viewer" | |||

| path = "examples/rerun-viewer/run.rs" | |||

| # The profile that 'dist' will build with | |||

| [profile.dist] | |||

| inherits = "release" | |||

+73 -0 Changelog.md

| @@ -1,5 +1,78 @@ | |||

| # Changelog | |||

| ## v0.3.11 (2025-04-07) | |||

| ## What's Changed | |||

| - Post dora 0.3.10 release fix by @haixuanTao in https://github.com/dora-rs/dora/pull/804 | |||

| - Add windows release for rust nodes by @haixuanTao in https://github.com/dora-rs/dora/pull/805 | |||

| - Add Node Table into README.md by @haixuanTao in https://github.com/dora-rs/dora/pull/808 | |||

| - update dora yaml json schema validator by @haixuanTao in https://github.com/dora-rs/dora/pull/809 | |||

| - Improve readme support matrix readability by @haixuanTao in https://github.com/dora-rs/dora/pull/810 | |||

| - Clippy automatic fixes applied by @Shar-jeel-Sajid in https://github.com/dora-rs/dora/pull/812 | |||

| - Improve documentation on adding new node to the node-hub by @haixuanTao in https://github.com/dora-rs/dora/pull/820 | |||

| - #807 Fixed by @7SOMAY in https://github.com/dora-rs/dora/pull/818 | |||

| - Applied Ruff pydocstyle to dora by @Mati-ur-rehman-017 in https://github.com/dora-rs/dora/pull/831 | |||

| - Related to dora-bot issue assignment by @MunishMummadi in https://github.com/dora-rs/dora/pull/840 | |||

| - Add dora-lerobot node into dora by @Ignavar in https://github.com/dora-rs/dora/pull/834 | |||

| - CI: Permit issue modifications for issue assign job by @phil-opp in https://github.com/dora-rs/dora/pull/848 | |||

| - Fix: Set variables outside bash script to prevent injection by @phil-opp in https://github.com/dora-rs/dora/pull/849 | |||

| - Replacing Deprecated functions of pyo3 by @Shar-jeel-Sajid in https://github.com/dora-rs/dora/pull/838 | |||

| - Add noise filtering on whisper to be able to use speakers by @haixuanTao in https://github.com/dora-rs/dora/pull/847 | |||

| - Add minimal Dockerfile with Python and uv for easy onboarding by @Krishnadubey1008 in https://github.com/dora-rs/dora/pull/843 | |||

| - More compact readme with example section by @haixuanTao in https://github.com/dora-rs/dora/pull/855 | |||

| - Create docker-image.yml by @haixuanTao in https://github.com/dora-rs/dora/pull/857 | |||

| - Multi platform docker by @haixuanTao in https://github.com/dora-rs/dora/pull/858 | |||

| - change: `dora/node-hub/README.md` by @MunishMummadi in https://github.com/dora-rs/dora/pull/862 | |||

| - Added dora-phi4 inside node-hub by @7SOMAY in https://github.com/dora-rs/dora/pull/861 | |||

| - node-hub: Added dora-magma node by @MunishMummadi in https://github.com/dora-rs/dora/pull/853 | |||

| - Added the dora-llama-cpp-python node by @ShashwatPatil in https://github.com/dora-rs/dora/pull/850 | |||

| - Adding in some missing types and test cases within arrow convert crate by @Ignavar in https://github.com/dora-rs/dora/pull/864 | |||

| - Migrate robots from dora-lerobot to dora repository by @rahat2134 in https://github.com/dora-rs/dora/pull/868 | |||

| - Applied pyupgrade style by @Mati-ur-rehman-017 in https://github.com/dora-rs/dora/pull/876 | |||

| - Adding additional llm in tests by @haixuanTao in https://github.com/dora-rs/dora/pull/873 | |||

| - Dora transformer node by @ShashwatPatil in https://github.com/dora-rs/dora/pull/870 | |||

| - Using macros in Arrow Conversion by @Shar-jeel-Sajid in https://github.com/dora-rs/dora/pull/877 | |||

| - Adding run command within python API by @haixuanTao in https://github.com/dora-rs/dora/pull/875 | |||

| - Added f16 type conversion by @Shar-jeel-Sajid in https://github.com/dora-rs/dora/pull/886 | |||

| - Added "PERF" flag inside node-hub by @7SOMAY in https://github.com/dora-rs/dora/pull/880 | |||

| - Added quality ruff-flags for better code quality by @7SOMAY in https://github.com/dora-rs/dora/pull/888 | |||

| - Add llm benchmark by @haixuanTao in https://github.com/dora-rs/dora/pull/881 | |||

| - Implement `into_vec_f64(&ArrowData) -> Vec<f64>` conversion function by @Shar-jeel-Sajid in https://github.com/dora-rs/dora/pull/893 | |||

| - Adding virtual env within dora build command by @haixuanTao in https://github.com/dora-rs/dora/pull/895 | |||

| - Adding metrics for node api by @haixuanTao in https://github.com/dora-rs/dora/pull/903 | |||

| - Made UI interface for input in dora, using Gradio by @ShashwatPatil in https://github.com/dora-rs/dora/pull/891 | |||

| - Add chinese voice support by @haixuanTao in https://github.com/dora-rs/dora/pull/902 | |||

| - Made conversion generic by @Shar-jeel-Sajid in https://github.com/dora-rs/dora/pull/908 | |||

| - Added husky simulation in Mujoco and gamepad node by @ShashwatPatil in https://github.com/dora-rs/dora/pull/906 | |||

| - use `cargo-dist` tool for dora-cli releases by @Hennzau in https://github.com/dora-rs/dora/pull/916 | |||

| - Implementing Self update by @Shar-jeel-Sajid in https://github.com/dora-rs/dora/pull/920 | |||

| - Fix: RUST_LOG=. dora run bug by @starlitxiling in https://github.com/dora-rs/dora/pull/924 | |||

| - Added dora-mistral-rs node in node-hub for inference in rust by @Ignavar in https://github.com/dora-rs/dora/pull/910 | |||

| - Fix reachy left arm by @haixuanTao in https://github.com/dora-rs/dora/pull/907 | |||

| - Functions for sending and receiving data using Arrow::FFI by @Mati-ur-rehman-017 in https://github.com/dora-rs/dora/pull/918 | |||

| - Adding `recv_async` dora method to retrieve data in python async by @haixuanTao in https://github.com/dora-rs/dora/pull/909 | |||

| - Update: README.md of the node hub by @Choudhry18 in https://github.com/dora-rs/dora/pull/929 | |||

| - Fix magma by @haixuanTao in https://github.com/dora-rs/dora/pull/926 | |||

| - Add support for mask in rerun by @haixuanTao in https://github.com/dora-rs/dora/pull/927 | |||

| - Bump array-init-cursor from 0.2.0 to 0.2.1 by @dependabot in https://github.com/dora-rs/dora/pull/933 | |||

| - Enhance Zenoh Integration Documentation by @NageshMandal in https://github.com/dora-rs/dora/pull/935 | |||

| - Support av1 by @haixuanTao in https://github.com/dora-rs/dora/pull/932 | |||

| - Bump dora v0.3.11 by @haixuanTao in https://github.com/dora-rs/dora/pull/948 | |||

| ## New Contributors | |||

| - @Shar-jeel-Sajid made their first contribution in https://github.com/dora-rs/dora/pull/812 | |||

| - @7SOMAY made their first contribution in https://github.com/dora-rs/dora/pull/818 | |||

| - @Mati-ur-rehman-017 made their first contribution in https://github.com/dora-rs/dora/pull/831 | |||

| - @MunishMummadi made their first contribution in https://github.com/dora-rs/dora/pull/840 | |||

| - @Ignavar made their first contribution in https://github.com/dora-rs/dora/pull/834 | |||

| - @Krishnadubey1008 made their first contribution in https://github.com/dora-rs/dora/pull/843 | |||

| - @ShashwatPatil made their first contribution in https://github.com/dora-rs/dora/pull/850 | |||

| - @rahat2134 made their first contribution in https://github.com/dora-rs/dora/pull/868 | |||

| - @Choudhry18 made their first contribution in https://github.com/dora-rs/dora/pull/929 | |||

| - @NageshMandal made their first contribution in https://github.com/dora-rs/dora/pull/935 | |||

| ## v0.3.10 (2025-03-04) | |||

| ## What's Changed | |||

+36 -16 README.md

| @@ -29,6 +29,9 @@ | |||

| <a href="https://pypi.org/project/dora-rs/"> | |||

| <img src="https://img.shields.io/pypi/v/dora-rs.svg" alt="PyPi Latest Release"/> | |||

| </a> | |||

| <a href="https://github.com/dora-rs/dora/blob/main/LICENSE"> | |||

| <img src="https://img.shields.io/github/license/dora-rs/dora" alt="PyPi Latest Release"/> | |||

| </a> | |||

| </div> | |||

| <div align="center"> | |||

| <a href="https://trendshift.io/repositories/9190" target="_blank"><img src="https://trendshift.io/api/badge/repositories/9190" alt="dora-rs%2Fdora | Trendshift" style="width: 250px; height: 55px;" width="250" height="55"/></a> | |||

| @@ -59,25 +62,30 @@ | |||

| <details open> | |||

| <summary><b>2025</b></summary> | |||

| - \[03/05\] dora-rs has been accepted to [**GSoC 2025 🎉**](https://summerofcode.withgoogle.com/programs/2025/organizations/dora-rs-tb), with the following [**idea list**](https://github.com/dora-rs/dora/wiki/GSoC_2025). | |||

| - \[03/04\] Add support for Zenoh for distributed dataflow. | |||

| - \[03/04\] Add support for Meta SAM2, Kokoro(TTS), Improved Qwen2.5 Performance using `llama.cpp`. | |||

| - \[05/25\] Add support for dora-pytorch-kinematics for fk and ik, dora-mediapipe for pose estimation, dora-rustypot for rust serialport read/write, points2d and points3d visualization in rerun. | |||

| - \[04/25\] Add support for dora-cotracker to track any point on a frame, dora-rav1e AV1 encoding up to 12bit and dora-dav1d AV1 decoding. | |||

| - \[03/25\] Add support for dora async Python. | |||

| - \[03/25\] Add support for Microsoft Phi4, Microsoft Magma. | |||

| - \[03/25\] dora-rs has been accepted to [**GSoC 2025 🎉**](https://summerofcode.withgoogle.com/programs/2025/organizations/dora-rs-tb), with the following [**idea list**](https://github.com/dora-rs/dora/wiki/GSoC_2025). | |||

| - \[03/25\] Add support for Zenoh for distributed dataflow. | |||

| - \[03/25\] Add support for Meta SAM2, Kokoro(TTS), Improved Qwen2.5 Performance using `llama.cpp`. | |||

| - \[02/25\] Add support for Qwen2.5(LLM), Qwen2.5-VL(VLM), outetts(TTS) | |||

| </details> | |||

| ## Support Matrix | |||

| | | dora-rs | | |||

| | --------------------------------- | ---------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | | |||

| | **APIs** | Python >= 3.7 ✅ <br> Rust ✅<br> C/C++ 🆗 <br>ROS2 >= Foxy 🆗 | | |||

| | **OS** | Linux: Arm 32 ✅ Arm 64 ✅ x64_86 ✅ <br>MacOS: Arm 64 ✅ x64_86 ✅<br>Windows: x64_86 🆗<br> Android: 🛠️ (Blocked by: https://github.com/elast0ny/shared_memory/issues/32) <br> IOS: 🛠️ | | |||

| | **Message Format** | Arrow ✅ <br> Standard Specification 🛠️ | | |||

| | **Local Communication** | Shared Memory ✅ <br> [Cuda IPC](https://arrow.apache.org/docs/python/api/cuda.html) 📐 | | |||

| | **Remote Communication** | [Zenoh](https://zenoh.io/) 📐 | | |||

| | **Metrics, Tracing, and Logging** | Opentelemetry 📐 | | |||

| | **Configuration** | YAML ✅ | | |||

| | **Package Manager** | [pip](https://pypi.org/): Python Node ✅ Rust Node ✅ C/C++ Node 🛠️ <br>[cargo](https://crates.io/): Rust Node ✅ | | |||

| | | dora-rs | | |||

| | --------------------------------- | -------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------------- | | |||

| | **APIs** | Python >= 3.7 including sync ⭐✅ <br> Rust ✅<br> C/C++ 🆗 <br>ROS2 >= Foxy 🆗 | | |||

| | **OS** | Linux: Arm 32 ⭐✅ Arm 64 ⭐✅ x64_86 ⭐✅ <br>MacOS: Arm 64 ⭐✅ x64_86 ✅<br>Windows: x64_86 🆗 <br>WSL: x64_86 🆗 <br> Android: 🛠️ (Blocked by: https://github.com/elast0ny/shared_memory/issues/32) <br> IOS: 🛠️ | | |||

| | **Message Format** | Arrow ✅ <br> Standard Specification 🛠️ | | |||

| | **Local Communication** | Shared Memory ✅ <br> [Cuda IPC](https://arrow.apache.org/docs/python/api/cuda.html) 📐 | | |||

| | **Remote Communication** | [Zenoh](https://zenoh.io/) 📐 | | |||

| | **Metrics, Tracing, and Logging** | Opentelemetry 📐 | | |||

| | **Configuration** | YAML ✅ | | |||

| | **Package Manager** | [pip](https://pypi.org/): Python Node ✅ Rust Node ✅ C/C++ Node 🛠️ <br>[cargo](https://crates.io/): Rust Node ✅ | | |||

| > - ⭐ = Recommended | |||

| > - ✅ = First Class Support | |||

| > - 🆗 = Best Effort Support | |||

| > - 📐 = Experimental and looking for contributions | |||

| @@ -172,13 +180,13 @@ cargo install dora-cli | |||

| ### With Github release for macOS and Linux | |||

| ```bash | |||

| curl --proto '=https' --tlsv1.2 -sSf https://raw.githubusercontent.com/dora-rs/dora/main/install.sh | bash | |||

| curl --proto '=https' --tlsv1.2 -LsSf https://github.com/dora-rs/dora/releases/latest/download/dora-cli-installer.sh | sh | |||

| ``` | |||

| ### With Github release for Windows | |||

| ```powershell | |||

| powershell -c "irm https://raw.githubusercontent.com/dora-rs/dora/main/install.ps1 | iex" | |||

| powershell -ExecutionPolicy ByPass -c "irm https://github.com/dora-rs/dora/releases/latest/download/dora-cli-installer.ps1 | iex" | |||

| ``` | |||

| ### With Source | |||

| @@ -331,3 +339,15 @@ We also have [a contributing guide](CONTRIBUTING.md). | |||

| ## License | |||

| This project is licensed under Apache-2.0. Check out [NOTICE.md](NOTICE.md) for more information. | |||

| --- | |||

| ## Further Resources 📚 | |||

| - [Zenoh Documentation](https://zenoh.io/docs/getting-started/first-app/) | |||

| - [DORA Zenoh Discussion (GitHub Issue #512)](https://github.com/dora-rs/dora/issues/512) | |||

| - [Dora Autoware Localization Demo](https://github.com/dora-rs/dora-autoware-localization-demo) | |||

| ``` | |||

| ``` | |||

+43 -47 apis/python/node/dora/cuda.py

| @@ -8,13 +8,19 @@ from numba.cuda import to_device | |||

| # Make sure to install numba with cuda | |||

| from numba.cuda.cudadrv.devicearray import DeviceNDArray | |||

| from numba.cuda.cudadrv.devices import get_context | |||

| from numba.cuda.cudadrv.driver import IpcHandle | |||

| # To install pyarrow.cuda, run `conda install pyarrow "arrow-cpp-proc=*=cuda" -c conda-forge` | |||

| from pyarrow import cuda | |||

| import json | |||

| from contextlib import contextmanager | |||

| from typing import ContextManager | |||

| def torch_to_ipc_buffer(tensor: torch.TensorType) -> tuple[pa.array, dict]: | |||

| """Convert a Pytorch tensor into a pyarrow buffer containing the IPC handle and its metadata. | |||

| """Convert a Pytorch tensor into a pyarrow buffer containing the IPC handle | |||

| and its metadata. | |||

| Example Use: | |||

| ```python | |||

| @@ -24,75 +30,65 @@ def torch_to_ipc_buffer(tensor: torch.TensorType) -> tuple[pa.array, dict]: | |||

| ``` | |||

| """ | |||

| device_arr = to_device(tensor) | |||

| cuda_buf = pa.cuda.CudaBuffer.from_numba(device_arr.gpu_data) | |||

| handle_buffer = cuda_buf.export_for_ipc().serialize() | |||

| ipch = get_context().get_ipc_handle(device_arr.gpu_data) | |||

| _, handle, size, source_info, offset = ipch.__reduce__()[1] | |||

| metadata = { | |||

| "shape": device_arr.shape, | |||

| "strides": device_arr.strides, | |||

| "dtype": device_arr.dtype.str, | |||

| "size": size, | |||

| "offset": offset, | |||

| "source_info": json.dumps(source_info), | |||

| } | |||

| return pa.array(handle_buffer, type=pa.uint8()), metadata | |||

| return pa.array(handle, pa.int8()), metadata | |||

| def ipc_buffer_to_ipc_handle(handle_buffer: pa.array) -> cuda.IpcMemHandle: | |||

| """Convert a buffer containing a serialized handler into cuda IPC MemHandle. | |||

| def ipc_buffer_to_ipc_handle(handle_buffer: pa.array, metadata: dict) -> IpcHandle: | |||

| """Convert a buffer containing a serialized handler into cuda IPC Handle. | |||

| example use: | |||

| ```python | |||

| from dora.cuda import ipc_buffer_to_ipc_handle, open_ipc_handle | |||

| import pyarrow as pa | |||

| from dora.cuda import ipc_buffer_to_ipc_handle, cudabuffer_to_torch | |||

| ctx = pa.cuda.context() | |||

| event = node.next() | |||

| ipc_handle = ipc_buffer_to_ipc_handle(event["value"]) | |||

| cudabuffer = ctx.open_ipc_buffer(ipc_handle) | |||

| torch_tensor = cudabuffer_to_torch(cudabuffer, event["metadata"]) # on cuda | |||

| ipc_handle = ipc_buffer_to_ipc_handle(event["value"], event["metadata"]) | |||

| with open_ipc_handle(ipc_handle, event["metadata"]) as tensor: | |||

| pass | |||

| ``` | |||

| """ | |||

| handle_buffer = handle_buffer.buffers()[1] | |||

| return pa.cuda.IpcMemHandle.from_buffer(handle_buffer) | |||

| handle = handle_buffer.to_pylist() | |||

| return IpcHandle._rebuild( | |||

| handle, | |||

| metadata["size"], | |||

| json.loads(metadata["source_info"]), | |||

| metadata["offset"], | |||

| ) | |||

| def cudabuffer_to_numba(buffer: cuda.CudaBuffer, metadata: dict) -> DeviceNDArray: | |||

| """Convert a pyarrow CUDA buffer to numba. | |||

| @contextmanager | |||

| def open_ipc_handle( | |||

| ipc_handle: IpcHandle, metadata: dict | |||

| ) -> ContextManager[torch.TensorType]: | |||

| """Open a CUDA IPC handle and return a Pytorch tensor. | |||

| example use: | |||

| ```python | |||

| from dora.cuda import ipc_buffer_to_ipc_handle, open_ipc_handle | |||

| import pyarrow as pa | |||

| from dora.cuda import ipc_buffer_to_ipc_handle, cudabuffer_to_torch | |||

| ctx = pa.cuda.context() | |||

| event = node.next() | |||

| ipc_handle = ipc_buffer_to_ipc_handle(event["value"]) | |||

| cudabuffer = ctx.open_ipc_buffer(ipc_handle) | |||

| numba_tensor = cudabuffer_to_numba(cudabuffer, event["metadata"]) | |||

| ipc_handle = ipc_buffer_to_ipc_handle(event["value"], event["metadata"]) | |||

| with open_ipc_handle(ipc_handle, event["metadata"]) as tensor: | |||

| pass | |||

| ``` | |||

| """ | |||

| shape = metadata["shape"] | |||

| strides = metadata["strides"] | |||

| dtype = metadata["dtype"] | |||

| return DeviceNDArray(shape, strides, dtype, gpu_data=buffer.to_numba()) | |||

| def cudabuffer_to_torch(buffer: cuda.CudaBuffer, metadata: dict) -> torch.Tensor: | |||

| """Convert a pyarrow CUDA buffer to a torch tensor. | |||

| example use: | |||

| ```python | |||

| import pyarrow as pa | |||

| from dora.cuda import ipc_buffer_to_ipc_handle, cudabuffer_to_torch | |||

| ctx = pa.cuda.context() | |||

| event = node.next() | |||

| ipc_handle = ipc_buffer_to_ipc_handle(event["value"]) | |||

| cudabuffer = ctx.open_ipc_buffer(ipc_handle) | |||

| torch_tensor = cudabuffer_to_torch(cudabuffer, event["metadata"]) # on cuda | |||

| ``` | |||

| """ | |||

| return torch.as_tensor(cudabuffer_to_numba(buffer, metadata), device="cuda") | |||

| try: | |||

| buffer = ipc_handle.open(get_context()) | |||

| device_arr = DeviceNDArray(shape, strides, dtype, gpu_data=buffer) | |||

| yield torch.as_tensor(device_arr, device="cuda") | |||

| finally: | |||

| ipc_handle.close() | |||
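The new `open_ipc_handle` wraps the handle in a `contextmanager` with `try`/`finally`, so the handle is closed even when the body of the `with` block raises. The sketch below shows only that pattern and runs without CUDA. `FakeIpcHandle` and `open_handle` are hypothetical stand-ins, not part of `dora.cuda` or numba.

```python
from contextlib import contextmanager


class FakeIpcHandle:
    """Hypothetical stand-in for an IPC handle; it only tracks open/close state."""

    def __init__(self):
        self.is_open = False

    def open(self):
        self.is_open = True
        return bytearray(4)  # placeholder for the mapped device memory

    def close(self):
        self.is_open = False


@contextmanager
def open_handle(handle):
    """Yield the opened buffer and always close the handle afterwards."""
    try:
        # As in the diff, open() sits inside the try block,
        # so close() runs whether open() or the caller's body fails.
        yield handle.open()
    finally:
        handle.close()
```

A caller would write `with open_handle(handle) as buf: ...` and never call `close()` directly, which is the same shape as the docstring example in the diff.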

+ 1

- 0

apis/rust/node/src/event_stream/scheduler.rs

| @@ -54,6 +54,7 @@ impl Scheduler { | |||

| if let Some((size, queue)) = self.event_queues.get_mut(event_id) { | |||

| // Remove the oldest event if at limit | |||

| if &queue.len() >= size { | |||

| tracing::debug!("Discarding event for input `{event_id}` due to queue size limit"); | |||

| queue.pop_front(); | |||

| } | |||

| queue.push_back(event); | |||
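The hunk above adds a debug log where the scheduler drops the oldest event once an input queue is full. As a rough illustration of that drop-oldest policy, here is a Python sketch (the real code is Rust). `BoundedQueue` and its `dropped` counter are hypothetical names used only for this example.

```python
from collections import deque


class BoundedQueue:
    """Hypothetical drop-oldest queue, loosely mirroring the scheduler hunk."""

    def __init__(self, size):
        self.size = size
        self.items = deque()
        self.dropped = 0  # stands in for the new debug log line

    def push(self, event):
        # Remove the oldest event if the queue is at its limit.
        if self.items and len(self.items) >= self.size:
            self.items.popleft()
            self.dropped += 1
        self.items.append(event)
```

With `size=3`, pushing five events keeps only the three most recent ones and counts two drops.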

+ 20

- 19

apis/rust/node/src/node/mod.rs

| @@ -32,9 +32,10 @@ use std::{ | |||

| use tracing::{info, warn}; | |||

| #[cfg(feature = "metrics")] | |||

| use dora_metrics::init_meter_provider; | |||

| use dora_metrics::run_metrics_monitor; | |||

| #[cfg(feature = "tracing")] | |||

| use dora_tracing::set_up_tracing; | |||

| use dora_tracing::TracingBuilder; | |||

| use tokio::runtime::{Handle, Runtime}; | |||

| pub mod arrow_utils; | |||

| @@ -81,8 +82,12 @@ impl DoraNode { | |||

| serde_yaml::from_str(&raw).context("failed to deserialize node config")? | |||

| }; | |||

| #[cfg(feature = "tracing")] | |||

| set_up_tracing(node_config.node_id.as_ref()) | |||

| .context("failed to set up tracing subscriber")?; | |||

| { | |||

| TracingBuilder::new(node_config.node_id.as_ref()) | |||

| .build() | |||

| .wrap_err("failed to set up tracing subscriber")?; | |||

| } | |||

| Self::init(node_config) | |||

| } | |||

| @@ -156,24 +161,20 @@ impl DoraNode { | |||

| let id = format!("{}/{}", dataflow_id, node_id); | |||

| #[cfg(feature = "metrics")] | |||

| match &rt { | |||

| TokioRuntime::Runtime(rt) => rt.spawn(async { | |||

| if let Err(e) = init_meter_provider(id) | |||

| .await | |||

| .context("failed to init metrics provider") | |||

| { | |||

| warn!("could not create metric provider with err: {:#?}", e); | |||

| } | |||

| }), | |||

| TokioRuntime::Handle(handle) => handle.spawn(async { | |||

| if let Err(e) = init_meter_provider(id) | |||

| { | |||

| let monitor_task = async move { | |||

| if let Err(e) = run_metrics_monitor(id.clone()) | |||

| .await | |||

| .context("failed to init metrics provider") | |||

| .wrap_err("metrics monitor exited unexpectedly") | |||

| { | |||

| warn!("could not create metric provider with err: {:#?}", e); | |||

| warn!("metrics monitor failed: {:#?}", e); | |||

| } | |||

| }), | |||

| }; | |||

| }; | |||

| match &rt { | |||

| TokioRuntime::Runtime(rt) => rt.spawn(monitor_task), | |||

| TokioRuntime::Handle(handle) => handle.spawn(monitor_task), | |||

| }; | |||

| } | |||

| let event_stream = EventStream::init( | |||

| dataflow_id, | |||

+ 7

- 1

benches/llms/README.md

| @@ -1,6 +1,12 @@ | |||

| # Benchmark LLM Speed | |||

| Use the following command to run the benchmark: | |||

| If you do not have a Python virtual environment set up, run: | |||

| ```bash | |||

| uv venv --seed -p 3.11 | |||

| ``` | |||

| Then use the following command to run the benchmark: | |||

| ```bash | |||

| dora build transformers.yaml --uv | |||

+ 2

- 0

benches/llms/llama_cpp_python.yaml

| @@ -1,5 +1,7 @@ | |||

| nodes: | |||

| - id: benchmark_script | |||

| build: | | |||

| pip install ../mllm | |||

| path: ../mllm/benchmark_script.py | |||

| inputs: | |||

| text: llm/text | |||

+ 2

- 0

benches/llms/mistralrs.yaml

| @@ -1,5 +1,7 @@ | |||

| nodes: | |||

| - id: benchmark_script | |||

| build: | | |||

| pip install ../mllm | |||

| path: ../mllm/benchmark_script.py | |||

| inputs: | |||

| text: llm/text | |||

+ 2

- 0

benches/llms/phi4.yaml

| @@ -1,5 +1,7 @@ | |||

| nodes: | |||

| - id: benchmark_script | |||

| build: | | |||

| pip install ../mllm | |||

| path: ../mllm/benchmark_script.py | |||

| inputs: | |||

| text: llm/text | |||

+ 2

- 0

benches/llms/qwen2.5.yaml

| @@ -1,5 +1,7 @@ | |||

| nodes: | |||

| - id: benchmark_script | |||

| build: | | |||

| pip install ../mllm | |||

| path: ../mllm/benchmark_script.py | |||

| inputs: | |||

| text: llm/text | |||

+ 2

- 0

benches/llms/transformers.yaml

| @@ -1,5 +1,7 @@ | |||

| nodes: | |||

| - id: benchmark_script | |||

| build: | | |||

| pip install ../mllm | |||

| path: ../mllm/benchmark_script.py | |||

| inputs: | |||

| text: llm/text | |||

+ 22

- 0

benches/mllm/pyproject.toml

| @@ -0,0 +1,22 @@ | |||

| [project] | |||

| name = "dora-bench" | |||

| version = "0.1.0" | |||

| description = "Script to benchmark performance of llms while using dora" | |||

| authors = [{ name = "Haixuan Xavier Tao", email = "tao.xavier@outlook.com" }] | |||

| license = { text = "MIT" } | |||

| readme = "README.md" | |||

| requires-python = ">=3.11" | |||

| dependencies = [ | |||

| "dora-rs>=0.3.9", | |||

| "librosa>=0.10.0", | |||

| "opencv-python>=4.8", | |||

| "Pillow>=10", | |||

| ] | |||

| [project.scripts] | |||

| dora-benches = "benchmark_script.main:main" | |||

| [build-system] | |||

| requires = ["setuptools>=61", "wheel"] | |||

| build-backend = "setuptools.build_meta" | |||

+ 1

- 0

binaries/cli/Cargo.toml

| @@ -60,6 +60,7 @@ pyo3 = { workspace = true, features = [ | |||

| "extension-module", | |||

| "abi3", | |||

| ], optional = true } | |||

| self-replace = "1.5.0" | |||

| dunce = "1.0.5" | |||

| git2 = { workspace = true } | |||

+ 91

- 21

binaries/cli/src/lib.rs

| @@ -15,8 +15,7 @@ use dora_message::{ | |||

| coordinator_to_cli::{ControlRequestReply, DataflowList, DataflowResult, DataflowStatus}, | |||

| }; | |||

| #[cfg(feature = "tracing")] | |||

| use dora_tracing::set_up_tracing; | |||

| use dora_tracing::{set_up_tracing_opts, FileLogging}; | |||

| use dora_tracing::TracingBuilder; | |||

| use duration_str::parse; | |||

| use eyre::{bail, Context}; | |||

| use formatting::FormatDataflowError; | |||

| @@ -246,7 +245,7 @@ enum Command { | |||

| #[clap(long)] | |||

| quiet: bool, | |||

| }, | |||

| /// Dora CLI self-management commands | |||

| Self_ { | |||

| #[clap(subcommand)] | |||

| command: SelfSubCommand, | |||

| @@ -255,11 +254,18 @@ enum Command { | |||

| #[derive(Debug, clap::Subcommand)] | |||

| enum SelfSubCommand { | |||

| /// Check for updates or update the CLI | |||

| Update { | |||

| /// Only check for updates without installing | |||

| #[clap(long)] | |||

| check_only: bool, | |||

| }, | |||

| /// Remove the Dora CLI from the system | |||

| Uninstall { | |||

| /// Force uninstallation without confirmation | |||

| #[clap(long)] | |||

| force: bool, | |||

| }, | |||

| } | |||

| #[derive(Debug, clap::Args)] | |||

| @@ -310,34 +316,42 @@ fn run_cli(args: Args) -> eyre::Result<()> { | |||

| .as_ref() | |||

| .map(|id| format!("{name}-{id}")) | |||

| .unwrap_or(name.to_string()); | |||

| let stdout = (!quiet).then_some("info,zenoh=warn"); | |||

| let file = Some(FileLogging { | |||

| file_name: filename, | |||

| filter: LevelFilter::INFO, | |||

| }); | |||

| set_up_tracing_opts(name, stdout, file) | |||

| .context("failed to set up tracing subscriber")?; | |||

| let mut builder = TracingBuilder::new(name); | |||

| if !quiet { | |||

| builder = builder.with_stdout("info,zenoh=warn"); | |||

| } | |||

| builder = builder.with_file(filename, LevelFilter::INFO)?; | |||

| builder | |||

| .build() | |||

| .wrap_err("failed to set up tracing subscriber")?; | |||

| } | |||

| Command::Runtime => { | |||

| // Do not set the runtime in the cli. | |||

| } | |||

| Command::Coordinator { quiet, .. } => { | |||

| let name = "dora-coordinator"; | |||

| let stdout = (!quiet).then_some("info"); | |||

| let file = Some(FileLogging { | |||

| file_name: name.to_owned(), | |||

| filter: LevelFilter::INFO, | |||

| }); | |||

| set_up_tracing_opts(name, stdout, file) | |||

| .context("failed to set up tracing subscriber")?; | |||

| let mut builder = TracingBuilder::new(name); | |||

| if !quiet { | |||

| builder = builder.with_stdout("info"); | |||

| } | |||

| builder = builder.with_file(name, LevelFilter::INFO)?; | |||

| builder | |||

| .build() | |||

| .wrap_err("failed to set up tracing subscriber")?; | |||

| } | |||

| Command::Run { .. } => { | |||

| let log_level = std::env::var("RUST_LOG").ok().or(Some("info".to_string())); | |||

| set_up_tracing_opts("run", log_level.as_deref(), None) | |||

| .context("failed to set up tracing subscriber")?; | |||

| let log_level = std::env::var("RUST_LOG").ok().unwrap_or("info".to_string()); | |||

| TracingBuilder::new("run") | |||

| .with_stdout(log_level) | |||

| .build() | |||

| .wrap_err("failed to set up tracing subscriber")?; | |||

| } | |||

| _ => { | |||

| set_up_tracing("dora-cli").context("failed to set up tracing subscriber")?; | |||

| TracingBuilder::new("dora-cli") | |||

| .with_stdout("warn") | |||

| .build() | |||

| .wrap_err("failed to set up tracing subscriber")?; | |||

| } | |||

| }; | |||

| @@ -574,6 +588,62 @@ fn run_cli(args: Args) -> eyre::Result<()> { | |||

| } | |||

| } | |||

| } | |||

| SelfSubCommand::Uninstall { force } => { | |||

| if !force { | |||

| let confirmed = | |||

| inquire::Confirm::new("Are you sure you want to uninstall Dora CLI?") | |||

| .with_default(false) | |||

| .prompt() | |||

| .wrap_err("Uninstallation cancelled")?; | |||

| if !confirmed { | |||

| println!("Uninstallation cancelled"); | |||

| return Ok(()); | |||

| } | |||

| } | |||

| println!("Uninstalling Dora CLI..."); | |||

| #[cfg(feature = "python")] | |||

| { | |||

| println!("Detected Python installation..."); | |||

| // Try uv pip uninstall first | |||

| let uv_status = std::process::Command::new("uv") | |||

| .args(["pip", "uninstall", "dora-rs-cli"]) | |||

| .status(); | |||

| if let Ok(status) = uv_status { | |||

| if status.success() { | |||

| println!("Dora CLI has been successfully uninstalled via uv pip."); | |||

| return Ok(()); | |||

| } | |||

| } | |||

| // Fall back to regular pip uninstall | |||

| println!("Trying with pip..."); | |||

| let status = std::process::Command::new("pip") | |||

| .args(["uninstall", "-y", "dora-rs-cli"]) | |||

| .status() | |||

| .wrap_err("Failed to run pip uninstall")?; | |||

| if status.success() { | |||

| println!("Dora CLI has been successfully uninstalled via pip."); | |||

| } else { | |||

| bail!("Failed to uninstall Dora CLI via pip."); | |||

| } | |||

| } | |||

| #[cfg(not(feature = "python"))] | |||

| { | |||

| match self_replace::self_delete() { | |||

| Ok(_) => { | |||

| println!("Dora CLI has been successfully uninstalled."); | |||

| } | |||

| Err(e) => { | |||

| bail!("Failed to uninstall Dora CLI: {}", e); | |||

| } | |||

| } | |||

| } | |||

| } | |||

| }, | |||

| }; | |||
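The `Uninstall` branch above tries `uv pip uninstall` first and falls back to `pip uninstall -y` when that does not succeed. The Python sketch below illustrates that ordering only. `uninstall_with_fallback` and the injectable `run` parameter are assumptions made for this example so the logic can be exercised without touching a real installation. Unlike the Rust code, it treats a missing `pip` binary the same way as a missing `uv` binary.

```python
import subprocess


def uninstall_with_fallback(package, run=subprocess.run):
    """Try each uninstall command in order and return the tool that succeeded."""
    attempts = [
        ["uv", "pip", "uninstall", package],   # preferred, as in the diff
        ["pip", "uninstall", "-y", package],   # fallback
    ]
    for cmd in attempts:
        try:
            if run(cmd).returncode == 0:
                return cmd[0]
        except OSError:
            # The tool is not installed or could not be started: try the next one.
            continue
    raise RuntimeError(f"failed to uninstall {package}")
```

Passing a fake `run` callable makes it possible to check the fallback order in a unit test without running either tool.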

+ 91

- 12

binaries/daemon/src/lib.rs

| @@ -287,21 +287,99 @@ impl Daemon { | |||

| None => None, | |||

| }; | |||

| let zenoh_config = match std::env::var(zenoh::Config::DEFAULT_CONFIG_PATH_ENV) { | |||

| Ok(path) => zenoh::Config::from_file(&path) | |||

| .map_err(|e| eyre!(e)) | |||

| .wrap_err_with(|| format!("failed to read zenoh config from {path}"))?, | |||

| Err(std::env::VarError::NotPresent) => zenoh::Config::default(), | |||

| let zenoh_session = match std::env::var(zenoh::Config::DEFAULT_CONFIG_PATH_ENV) { | |||

| Ok(path) => { | |||

| let zenoh_config = zenoh::Config::from_file(&path) | |||

| .map_err(|e| eyre!(e)) | |||

| .wrap_err_with(|| format!("failed to read zenoh config from {path}"))?; | |||

| zenoh::open(zenoh_config) | |||

| .await | |||

| .map_err(|e| eyre!(e)) | |||

| .context("failed to open zenoh session")? | |||

| } | |||

| Err(std::env::VarError::NotPresent) => { | |||

| let mut zenoh_config = zenoh::Config::default(); | |||

| if let Some(addr) = coordinator_addr { | |||

| // Linkstate makes it possible to connect two daemons on different networks through a public daemon | |||

| // TODO: There is currently a CI/CD Error in windows linkstate. | |||

| if cfg!(not(target_os = "windows")) { | |||

| zenoh_config | |||

| .insert_json5("routing/peer", r#"{ mode: "linkstate" }"#) | |||

| .unwrap(); | |||

| } | |||

| zenoh_config | |||

| .insert_json5( | |||

| "connect/endpoints", | |||

| &format!( | |||

| r#"{{ router: ["tcp/[::]:7447"], peer: ["tcp/{}:5456"] }}"#, | |||

| addr.ip() | |||

| ), | |||

| ) | |||

| .unwrap(); | |||

| zenoh_config | |||

| .insert_json5( | |||

| "listen/endpoints", | |||

| r#"{ router: ["tcp/[::]:7447"], peer: ["tcp/[::]:5456"] }"#, | |||

| ) | |||

| .unwrap(); | |||

| if cfg!(target_os = "macos") { | |||

| warn!("disabling multicast on macos systems. Enable it with the ZENOH_CONFIG env variable or file"); | |||

| zenoh_config | |||

| .insert_json5("scouting/multicast", r#"{ enabled: false }"#) | |||

| .unwrap(); | |||

| } | |||

| }; | |||

| if let Ok(zenoh_session) = zenoh::open(zenoh_config).await { | |||

| zenoh_session | |||

| } else { | |||

| warn!( | |||

| "failed to open zenoh session, retrying with default config + coordinator" | |||

| ); | |||

| let mut zenoh_config = zenoh::Config::default(); | |||

| // Linkstate makes it possible to connect two daemons on different networks through a public daemon | |||

| // TODO: There is currently a CI/CD Error in windows linkstate. | |||

| if cfg!(not(target_os = "windows")) { | |||

| zenoh_config | |||

| .insert_json5("routing/peer", r#"{ mode: "linkstate" }"#) | |||

| .unwrap(); | |||

| } | |||

| if let Some(addr) = coordinator_addr { | |||

| zenoh_config | |||

| .insert_json5( | |||

| "connect/endpoints", | |||

| &format!( | |||

| r#"{{ router: ["tcp/[::]:7447"], peer: ["tcp/{}:5456"] }}"#, | |||

| addr.ip() | |||

| ), | |||

| ) | |||

| .unwrap(); | |||

| if cfg!(target_os = "macos") { | |||

| warn!("disabling multicast on macos systems. Enable it with the ZENOH_CONFIG env variable or file"); | |||

| zenoh_config | |||

| .insert_json5("scouting/multicast", r#"{ enabled: false }"#) | |||

| .unwrap(); | |||

| } | |||

| } | |||

| if let Ok(zenoh_session) = zenoh::open(zenoh_config).await { | |||

| zenoh_session | |||

| } else { | |||

| warn!("failed to open zenoh session, retrying with default config"); | |||

| let zenoh_config = zenoh::Config::default(); | |||

| zenoh::open(zenoh_config) | |||

| .await | |||

| .map_err(|e| eyre!(e)) | |||

| .context("failed to open zenoh session")? | |||

| } | |||

| } | |||

| } | |||

| Err(std::env::VarError::NotUnicode(_)) => eyre::bail!( | |||

| "{} env variable is not valid unicode", | |||

| zenoh::Config::DEFAULT_CONFIG_PATH_ENV | |||

| ), | |||

| }; | |||

| let zenoh_session = zenoh::open(zenoh_config) | |||

| .await | |||

| .map_err(|e| eyre!(e)) | |||

| .context("failed to open zenoh session")?; | |||

| let (dora_events_tx, dora_events_rx) = mpsc::channel(5); | |||

| let daemon = Self { | |||

| logger: Logger { | |||

| @@ -2210,10 +2288,11 @@ impl ProcessId { | |||

| pub fn kill(&mut self) -> bool { | |||

| if let Some(pid) = self.0 { | |||

| let pid = Pid::from(pid as usize); | |||

| let mut system = sysinfo::System::new(); | |||

| system.refresh_processes(); | |||

| system.refresh_process(pid); | |||

| if let Some(process) = system.process(Pid::from(pid as usize)) { | |||

| if let Some(process) = system.process(pid) { | |||

| process.kill(); | |||

| self.mark_as_stopped(); | |||

| return true; | |||
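When no zenoh config file is given, the zenoh session hunk in this file tries three configurations in turn. It starts with a config that sets both `connect` and `listen` endpoints, then one with `connect` endpoints only, then the plain default. The Python sketch below captures only that "first config that opens wins" structure. `open_with_fallback`, the `open_session` callable and the dictionary configs are hypothetical and are not the zenoh API.

```python
def open_with_fallback(candidates, open_session):
    """Try each (label, config) pair in order; return the first session that opens."""
    last_error = None
    for label, config in candidates:
        try:
            return label, open_session(config)
        except Exception as err:
            # The Rust code discards the error and logs a warning before retrying.
            last_error = err
    raise RuntimeError("failed to open zenoh session") from last_error


# Illustrative placeholders for the three tiers described above.
CANDIDATES = [
    ("connect+listen", {"connect": ["peer"], "listen": ["peer"]}),
    ("connect-only", {"connect": ["peer"]}),
    ("default", {}),
]
```

Expressing the tiers as a list keeps the retry logic in one place, whereas the diff repeats the `insert_json5` calls in each nested `else` branch.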

binaries/daemon/src/spawn/mod.rs → binaries/daemon/src/spawn.rs

| @@ -28,7 +28,7 @@ use dora_node_api::{ | |||

| arrow_utils::{copy_array_into_sample, required_data_size}, | |||

| Metadata, | |||

| }; | |||

| use eyre::{ContextCompat, WrapErr}; | |||

| use eyre::{bail, ContextCompat, WrapErr}; | |||

| use std::{ | |||

| future::Future, | |||

| path::{Path, PathBuf}, | |||

| @@ -201,14 +201,14 @@ impl Spawner { | |||

| { | |||

| let conda = which::which("conda").context( | |||

| "failed to find `conda`, yet a `conda_env` was defined. Make sure that `conda` is available.", | |||

| )?; | |||

| )?; | |||

| let mut command = tokio::process::Command::new(conda); | |||

| command.args([ | |||

| "run", | |||

| "-n", | |||

| conda_env, | |||

| "python", | |||

| "-c", | |||

| "-uc", | |||

| format!("import dora; dora.start_runtime() # {}", node.id).as_str(), | |||

| ]); | |||

| Some(command) | |||

| @@ -234,20 +234,51 @@ impl Spawner { | |||

| }; | |||

| // Force python to always flush stdout/stderr buffer | |||

| cmd.args([ | |||

| "-c", | |||

| "-uc", | |||

| format!("import dora; dora.start_runtime() # {}", node.id).as_str(), | |||

| ]); | |||

| Some(cmd) | |||

| } | |||

| } else if python_operators.is_empty() && other_operators { | |||

| let mut cmd = tokio::process::Command::new( | |||

| std::env::current_exe() | |||

| .wrap_err("failed to get current executable path")?, | |||

| ); | |||

| cmd.arg("runtime"); | |||

| Some(cmd) | |||

| let current_exe = std::env::current_exe() | |||

| .wrap_err("failed to get current executable path")?; | |||

| let mut file_name = current_exe.clone(); | |||

| file_name.set_extension(""); | |||

| let file_name = file_name | |||

| .file_name() | |||

| .and_then(|s| s.to_str()) | |||

| .context("failed to get file name from current executable")?; | |||

| // Check if the current executable is a python binary meaning that dora is installed within the python environment | |||

| if file_name.ends_with("python") || file_name.ends_with("python3") { | |||

| // Use the current executable to spawn runtime | |||

| let python = get_python_path() | |||

| .wrap_err("Could not find python path when spawning custom node")?; | |||

| let mut cmd = tokio::process::Command::new(python); | |||

| tracing::info!( | |||

| "spawning: python -uc import dora; dora.start_runtime() # {}", | |||

| node.id | |||

| ); | |||

| cmd.args([ | |||

| "-uc", | |||

| format!("import dora; dora.start_runtime() # {}", node.id).as_str(), | |||

| ]); | |||

| Some(cmd) | |||

| } else { | |||

| let mut cmd = tokio::process::Command::new( | |||

| std::env::current_exe() | |||

| .wrap_err("failed to get current executable path")?, | |||

| ); | |||

| cmd.arg("runtime"); | |||

| Some(cmd) | |||

| } | |||

| } else { | |||

| eyre::bail!("Runtime can not mix Python Operator with other type of operator."); | |||

| bail!( | |||

| "Cannot spawn runtime with both Python and non-Python operators. \ | |||

| Please use a single operator or ensure that all operators are Python-based." | |||

| ); | |||

| }; | |||

| let runtime_config = RuntimeConfig { | |||
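The new branch in `spawn.rs` strips the extension from the current executable's file name and checks whether the result ends with `python` or `python3`. This decides whether the runtime is started through the Python interpreter or through `dora runtime`. A Python sketch of that check follows. `looks_like_python` is a hypothetical helper name, and `with_suffix("")` is assumed to match Rust's `set_extension("")` for these inputs.

```python
from pathlib import PurePath


def looks_like_python(executable):
    """Return True if the executable name, minus its last extension, ends with python/python3."""
    stem = PurePath(executable).with_suffix("").name
    return stem.endswith(("python", "python3"))
```

Note that a name such as `python3.11` passes because only the last extension (`.11`) is removed, and that any name merely ending in `python` also matches, as in the Rust condition.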

+ 9

- 5

binaries/runtime/src/lib.rs

| @@ -5,15 +5,14 @@ use dora_core::{ | |||

| descriptor::OperatorConfig, | |||

| }; | |||

| use dora_message::daemon_to_node::{NodeConfig, RuntimeConfig}; | |||

| use dora_metrics::init_meter_provider; | |||

| use dora_metrics::run_metrics_monitor; | |||

| use dora_node_api::{DoraNode, Event}; | |||

| use dora_tracing::TracingBuilder; | |||

| use eyre::{bail, Context, Result}; | |||

| use futures::{Stream, StreamExt}; | |||

| use futures_concurrency::stream::Merge; | |||

| use operator::{run_operator, OperatorEvent, StopReason}; | |||

| #[cfg(feature = "tracing")] | |||

| use dora_tracing::set_up_tracing; | |||

| use std::{ | |||

| collections::{BTreeMap, BTreeSet, HashMap}, | |||

| mem, | |||

| @@ -37,7 +36,12 @@ pub fn main() -> eyre::Result<()> { | |||

| } = config; | |||

| let node_id = config.node_id.clone(); | |||

| #[cfg(feature = "tracing")] | |||

| set_up_tracing(node_id.as_ref()).context("failed to set up tracing subscriber")?; | |||

| { | |||

| TracingBuilder::new(node_id.as_ref()) | |||

| .with_stdout("warn") | |||

| .build() | |||

| .wrap_err("failed to set up tracing subscriber")?; | |||

| } | |||

| let dataflow_descriptor = config.dataflow_descriptor.clone(); | |||

| @@ -123,7 +127,7 @@ async fn run( | |||

| init_done: oneshot::Receiver<Result<()>>, | |||

| ) -> eyre::Result<()> { | |||

| #[cfg(feature = "metrics")] | |||

| let _meter_provider = init_meter_provider(config.node_id.to_string()); | |||

| let _meter_provider = run_metrics_monitor(config.node_id.to_string()); | |||

| init_done | |||

| .await | |||

| .wrap_err("the `init_done` channel was closed unexpectedly")? | |||

+ 62

- 0

examples/av1-encoding/dataflow.yml

| @@ -0,0 +1,62 @@ | |||

| nodes: | |||

| - id: camera | |||

| build: pip install ../../node-hub/opencv-video-capture | |||

| path: opencv-video-capture | |||

| _unstable_deploy: | |||

| machine: encoder | |||

| inputs: | |||

| tick: dora/timer/millis/50 | |||

| outputs: | |||

| - image | |||

| env: | |||

| CAPTURE_PATH: 0 | |||

| IMAGE_WIDTH: 1280 | |||

| IMAGE_HEIGHT: 720 | |||

| - id: rav1e-local | |||

| path: dora-rav1e | |||

| build: cargo build -p dora-rav1e --release | |||

| _unstable_deploy: | |||

| machine: encoder | |||

| inputs: | |||

| image: camera/image | |||

| outputs: | |||

| - image | |||

| - id: dav1d-remote | |||

| path: dora-dav1d | |||

| build: cargo build -p dora-dav1d --release | |||

| _unstable_deploy: | |||

| machine: decoder | |||

| inputs: | |||

| image: rav1e-local/image | |||

| outputs: | |||

| - image | |||

| - id: rav1e-remote | |||

| path: dora-rav1e | |||

| build: cargo build -p dora-rav1e --release | |||

| _unstable_deploy: | |||

| machine: decoder | |||

| inputs: | |||

| image: dav1d-remote/image | |||

| outputs: | |||

| - image | |||

| - id: dav1d-local | |||

| path: dora-dav1d | |||

| build: cargo build -p dora-dav1d --release | |||

| _unstable_deploy: | |||

| machine: encoder | |||

| inputs: | |||

| image: rav1e-remote/image | |||

| outputs: | |||

| - image | |||

| - id: plot | |||

| build: pip install -e ../../node-hub/dora-rerun | |||

| _unstable_deploy: | |||

| machine: encoder | |||

| path: dora-rerun | |||

| inputs: | |||

| image_decode: dav1d-local/image | |||

+ 68

- 0

examples/av1-encoding/dataflow_reachy.yml

| @@ -0,0 +1,68 @@ | |||

| nodes: | |||

| - id: camera | |||

| path: dora-reachy2-camera | |||

| _unstable_deploy: | |||

| machine: encoder | |||

| inputs: | |||

| tick: dora/timer/millis/50 | |||

| outputs: | |||

| - image_right | |||

| - image_left | |||

| - image_depth | |||

| - depth | |||

| env: | |||

| CAPTURE_PATH: 0 | |||

| IMAGE_WIDTH: 640 | |||

| IMAGE_HEIGHT: 480 | |||

| ROBOT_IP: 127.0.0.1 | |||

| - id: rav1e-local | |||

| path: dora-rav1e | |||

| build: cargo build -p dora-rav1e --release | |||

| _unstable_deploy: | |||

| machine: encoder | |||

| inputs: | |||

| depth: camera/depth | |||

| outputs: | |||

| - depth | |||

| env: | |||

| RAV1E_SPEED: 7 | |||

| - id: rav1e-local-image | |||

| path: dora-rav1e | |||

| build: cargo build -p dora-rav1e --release | |||

| _unstable_deploy: | |||

| machine: encoder | |||

| inputs: | |||

| image_depth: camera/image_depth | |||

| image_left: camera/image_left | |||

| outputs: | |||

| - image_left | |||

| - image_depth | |||

| - depth | |||

| env: | |||

| RAV1E_SPEED: 10 | |||

| - id: dav1d-remote | |||

| path: dora-dav1d | |||

| build: cargo build -p dora-dav1d --release | |||

| _unstable_deploy: | |||

| machine: plot | |||

| inputs: | |||

| image_depth: rav1e-local-image/image_depth | |||

| image_left: rav1e-local-image/image_left | |||

| depth: rav1e-local/depth | |||

| outputs: | |||

| - image_left | |||

| - image_depth | |||

| - depth | |||

| - id: plot | |||

| build: pip install -e ../../node-hub/dora-rerun | |||

| _unstable_deploy: | |||

| machine: plot | |||

| path: dora-rerun | |||

| inputs: | |||

| image: dav1d-remote/image_depth | |||

| depth: dav1d-remote/depth | |||

| image_left: dav1d-remote/image_left | |||

+ 54

- 0

examples/av1-encoding/ios-dev.yaml

| @@ -0,0 +1,54 @@ | |||

| nodes: | |||

| - id: camera | |||

| build: pip install -e ../../node-hub/dora-ios-lidar | |||

| path: dora-ios-lidar | |||

| inputs: | |||

| tick: dora/timer/millis/20 | |||

| outputs: | |||

| - image | |||

| - depth | |||

| env: | |||

| IMAGE_WIDTH: 1280 | |||

| IMAGE_HEIGHT: 720 | |||

| ROTATE: ROTATE_90_CLOCKWISE | |||

| - id: rav1e-local | |||

| path: dora-rav1e | |||

| build: cargo build -p dora-rav1e --release | |||

| inputs: | |||

| image: camera/image | |||

| outputs: | |||

| - image | |||

| env: | |||

| RAV1E_SPEED: 10 | |||

| - id: rav1e-local-depth | |||

| path: dora-rav1e | |||

| build: cargo build -p dora-rav1e --release | |||

| inputs: | |||

| depth: camera/depth | |||

| outputs: | |||

| - depth | |||

| env: | |||

| RAV1E_SPEED: 10 | |||

| - id: dav1d-local-depth | |||

| path: dora-dav1d | |||

| build: cargo build -p dora-dav1d --release | |||

| inputs: | |||

| depth: rav1e-local-depth/depth | |||

| outputs: | |||

| - depth | |||

| - id: dav1d-local | |||

| path: dora-dav1d | |||

| build: cargo build -p dora-dav1d --release | |||

| inputs: | |||

| image: rav1e-local/image | |||

| outputs: | |||

| - image | |||

| - id: plot | |||

| build: pip install -e ../../node-hub/dora-rerun | |||

| path: dora-rerun | |||

| inputs: | |||

| image: dav1d-local/image | |||

| depth: dav1d-local-depth/depth | |||

+ 3

- 1

examples/benchmark/dataflow.yml

| @@ -11,4 +11,6 @@ nodes: | |||

| path: ../../target/release/benchmark-example-sink | |||

| inputs: | |||

| latency: rust-node/latency | |||

| throughput: rust-node/throughput | |||

| throughput: | |||

| source: rust-node/throughput | |||

| queue_size: 1000 | |||

+ 18

- 27

examples/benchmark/node/src/main.rs

| @@ -1,7 +1,6 @@ | |||

| use dora_node_api::{self, dora_core::config::DataId, DoraNode}; | |||

| use eyre::{Context, ContextCompat}; | |||

| use rand::Rng; | |||

| use std::collections::HashMap; | |||

| use eyre::Context; | |||

| use rand::RngCore; | |||

| use std::time::Duration; | |||

| use tracing_subscriber::Layer; | |||

| @@ -25,26 +24,17 @@ fn main() -> eyre::Result<()> { | |||

| 1000 * 4096, | |||

| ]; | |||

| let mut data = HashMap::new(); | |||

| for size in sizes { | |||

| let vec: Vec<u8> = rand::thread_rng() | |||

| .sample_iter(rand::distributions::Standard) | |||

| .take(size) | |||

| .collect(); | |||

| data.insert(size, vec); | |||

| } | |||

| let data = sizes.map(|size| { | |||

| let mut data = vec![0u8; size]; | |||

| rand::thread_rng().fill_bytes(&mut data); | |||

| data | |||

| }); | |||

| // test latency first | |||

| for size in sizes { | |||

| for _ in 0..100 { | |||

| let data = data.get(&size).wrap_err(eyre::Report::msg(format!( | |||

| "data not found for size {}", | |||

| size | |||

| )))?; | |||

| for data in &data { | |||

| for _ in 0..1 { | |||

| node.send_output_raw(latency.clone(), Default::default(), data.len(), |out| { | |||

| out.copy_from_slice(data); | |||

| out.copy_from_slice(&data); | |||

| })?; | |||

| // sleep a bit to avoid queue buildup | |||

| @@ -56,17 +46,18 @@ fn main() -> eyre::Result<()> { | |||

| std::thread::sleep(Duration::from_secs(2)); | |||

| // then throughput with full speed | |||

| for size in sizes { | |||

| for data in &data { | |||

| for _ in 0..100 { | |||

| let data = data.get(&size).wrap_err(eyre::Report::msg(format!( | |||

| "data not found for size {}", | |||

| size | |||

| )))?; | |||

| node.send_output_raw(throughput.clone(), Default::default(), data.len(), |out| { | |||

| out.copy_from_slice(data); | |||

| out.copy_from_slice(&data); | |||

| })?; | |||

| } | |||

| // notify sink that all messages have been sent | |||

| node.send_output_raw(throughput.clone(), Default::default(), 1, |out| { | |||

| out.copy_from_slice(&[1]); | |||

| })?; | |||

| std::thread::sleep(Duration::from_secs(2)); | |||

| } | |||

| Ok(()) | |||

+ 2

- 3

examples/benchmark/sink/src/main.rs

| @@ -24,7 +24,8 @@ fn main() -> eyre::Result<()> { | |||

| // check if new size bracket | |||

| let data_len = data.len(); | |||

| if data_len != current_size { | |||

| if n > 0 { | |||

| // data of length 1 is used to sync | |||

| if n > 0 && current_size != 1 { | |||

| record_results(start, current_size, n, latencies, latency); | |||

| } | |||

| current_size = data_len; | |||

| @@ -63,8 +64,6 @@ fn main() -> eyre::Result<()> { | |||

| } | |||

| } | |||

| record_results(start, current_size, n, latencies, latency); | |||

| Ok(()) | |||

| } | |||
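
Note on the sink change above: the sender now emits a 1-byte message after each throughput size bracket, and the sink skips recording when the bracket being closed is that 1-byte marker. A minimal sketch of the bracket logic, written in Python for brevity. `split_brackets` is a hypothetical helper, not part of the example, and the counter reset on a size change is an assumption about the parts of the sink not shown in this diff:

```python
def split_brackets(message_lengths):
    """Group consecutive message lengths into (size, count) brackets.

    A message of length 1 is treated as a sync marker: it closes the
    bracket before it, but is never reported as a bracket itself.
    """
    results = []
    current_size = None
    n = 0
    for length in message_lengths:
        if length != current_size:
            # Record the bracket that just ended, unless it was the sync marker.
            if n > 0 and current_size != 1:
                results.append((current_size, n))
            current_size = length
            n = 0  # assumption: the counter restarts for each new bracket
        n += 1
    return results


# Three 8-byte messages, a sync marker, two 64-byte messages, a sync marker.
print(split_brackets([8, 8, 8, 1, 64, 64, 1]))
```

Because the trailing marker closes the last bracket, no extra `record_results` call is needed after the loop in this sketch, which is consistent with that call being dropped in the second hunk.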

+ 11

- 12

examples/cuda-benchmark/demo_receiver.py

| @@ -1,7 +1,6 @@ | |||

| #!/usr/bin/env python | |||

| """TODO: Add docstring.""" | |||

| import os | |||

| import time | |||

| @@ -9,7 +8,7 @@ import numpy as np | |||

| import pyarrow as pa | |||

| import torch | |||

| from dora import Node | |||

| from dora.cuda import cudabuffer_to_torch, ipc_buffer_to_ipc_handle | |||

| from dora.cuda import ipc_buffer_to_ipc_handle, open_ipc_handle | |||

| from helper import record_results | |||

| from tqdm import tqdm | |||

| @@ -17,7 +16,6 @@ torch.tensor([], device="cuda") | |||

| pa.array([]) | |||

| context = pa.cuda.Context() | |||

| node = Node("node_2") | |||

| current_size = 8 | |||

| @@ -29,8 +27,6 @@ DEVICE = os.getenv("DEVICE", "cuda") | |||

| NAME = f"dora torch {DEVICE}" | |||

| ctx = pa.cuda.Context() | |||

| print() | |||

| print("Receiving 40MB packets using default dora-rs") | |||

| @@ -49,13 +45,13 @@ while True: | |||

| if event["metadata"]["device"] != "cuda": | |||

| # BEFORE | |||

| handle = event["value"].to_numpy() | |||

| scope = None | |||

| torch_tensor = torch.tensor(handle, device="cuda") | |||

| else: | |||

| # AFTER | |||

| # storage needs to be spawned in the same file as where it's used. Don't ask me why. | |||

| ipc_handle = ipc_buffer_to_ipc_handle(event["value"]) | |||

| cudabuffer = ctx.open_ipc_buffer(ipc_handle) | |||

| torch_tensor = cudabuffer_to_torch(cudabuffer, event["metadata"]) # on cuda | |||

| ipc_handle = ipc_buffer_to_ipc_handle(event["value"], event["metadata"]) | |||

| scope = open_ipc_handle(ipc_handle, event["metadata"]) | |||

| torch_tensor = scope.__enter__() | |||

| else: | |||

| break | |||

| t_received = time.perf_counter_ns() | |||

| @@ -73,6 +69,9 @@ while True: | |||

| latencies = [] | |||

| n += 1 | |||

| if scope: | |||

| scope.__exit__(None, None, None) | |||

| mean_cuda = np.array(latencies).mean() | |||

| pbar.close() | |||

| @@ -81,9 +80,9 @@ time.sleep(2) | |||

| print() | |||

| print("----") | |||

| print(f"Node communication duration with default dora-rs: {mean_cpu/1000:.1f}ms") | |||

| print(f"Node communication duration with dora CUDA->CUDA: {mean_cuda/1000:.1f}ms") | |||

| print(f"Node communication duration with default dora-rs: {mean_cpu / 1000:.1f}ms") | |||

| print(f"Node communication duration with dora CUDA->CUDA: {mean_cuda / 1000:.1f}ms") | |||

| print("----") | |||

| print(f"Speed Up: {(mean_cpu)/(mean_cuda):.0f}") | |||

| print(f"Speed Up: {(mean_cpu) / (mean_cuda):.0f}") | |||

| record_results(NAME, current_size, latencies) | |||

+ 8

- 10

examples/cuda-benchmark/receiver.py

| @@ -1,14 +1,13 @@ | |||

| #!/usr/bin/env python | |||

| """TODO: Add docstring.""" | |||

| import os | |||

| import time | |||

| import pyarrow as pa | |||

| import torch | |||

| from dora import Node | |||

| from dora.cuda import cudabuffer_to_torch, ipc_buffer_to_ipc_handle | |||

| from dora.cuda import ipc_buffer_to_ipc_handle, open_ipc_handle | |||

| from helper import record_results | |||

| from tqdm import tqdm | |||

| @@ -17,7 +16,6 @@ torch.tensor([], device="cuda") | |||

| pa.array([]) | |||

| pbar = tqdm(total=100) | |||

| context = pa.cuda.Context() | |||

| node = Node("node_2") | |||

| @@ -29,8 +27,6 @@ DEVICE = os.getenv("DEVICE", "cuda") | |||

| NAME = f"dora torch {DEVICE}" | |||

| ctx = pa.cuda.Context() | |||

| while True: | |||

| event = node.next() | |||

| if event["type"] == "INPUT": | |||

| @@ -40,12 +36,12 @@ while True: | |||

| # BEFORE | |||

| handle = event["value"].to_numpy() | |||

| torch_tensor = torch.tensor(handle, device="cuda") | |||

| scope = None | |||

| else: | |||

| # AFTER | |||

| # storage needs to be spawned in the same file as where it's used. Don't ask me why. | |||

| ipc_handle = ipc_buffer_to_ipc_handle(event["value"]) | |||

| cudabuffer = ctx.open_ipc_buffer(ipc_handle) | |||

| torch_tensor = cudabuffer_to_torch(cudabuffer, event["metadata"]) # on cuda | |||

| ipc_handle = ipc_buffer_to_ipc_handle(event["value"], event["metadata"]) | |||

| scope = open_ipc_handle(ipc_handle, event["metadata"]) | |||

| torch_tensor = scope.__enter__() | |||

| else: | |||

| break | |||

| t_received = time.perf_counter_ns() | |||

| @@ -53,7 +49,6 @@ while True: | |||

| if length != current_size: | |||

| if n > 0: | |||

| pbar.close() | |||

| pbar = tqdm(total=100) | |||

| record_results(NAME, current_size, latencies) | |||

| @@ -69,4 +64,7 @@ while True: | |||

| n += 1 | |||

| i += 1 | |||

| if scope: | |||

| scope.__exit__(None, None, None) | |||

| record_results(NAME, current_size, latencies) | |||
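
Note on the `scope.__enter__()` / `scope.__exit__(None, None, None)` pair in the two receivers above: these are the calls a `with` statement makes on a context manager, written out by hand so the tensor can stay open across the timing code. A minimal sketch of the equivalence, using a hypothetical stand-in `open_handle` rather than the real `dora.cuda.open_ipc_handle` (which needs a CUDA device and whose internals are not shown in this diff):

```python
from contextlib import contextmanager

events = []


@contextmanager
def open_handle(name):
    """Hypothetical stand-in for a resource that must be released after use."""
    events.append(f"open {name}")
    try:
        yield name.upper()
    finally:
        events.append(f"close {name}")


# Manual form, as in the receivers: enter now, exit later in the loop body.
scope = open_handle("a")
value = scope.__enter__()
events.append(f"use {value}")
scope.__exit__(None, None, None)

# Equivalent `with` form: exit runs automatically, even if the body raises.
with open_handle("b") as value:
    events.append(f"use {value}")

print(events)
```

The manual form does not run the exit step if an exception is raised between the two calls; where the control flow allows it, a `with` block (or `contextlib.ExitStack`) avoids that.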

+ 3

- 0

examples/depth_camera/ios-dev.yaml

| @@ -7,6 +7,9 @@ nodes: | |||

| outputs: | |||

| - image | |||

| - depth | |||

| env: | |||

| IMAGE_WIDTH: 640 | |||

| IMAGE_HEIGHT: 480 | |||

| - id: plot | |||

| build: pip install -e ../../node-hub/dora-rerun | |||

+ 11

- 0

examples/mediapipe/README.md

| @@ -0,0 +1,11 @@ | |||

| # Mediapipe example | |||

| ## Make sure to have a webcam connected | |||

| ```bash | |||

| uv venv --seed | |||

| dora build rgb-dev.yml --uv | |||

| dora run rgb-dev.yml --uv | |||

| ## If the points are not plotted by default, you should try to add a 2d viewer within rerun. | |||

| ``` | |||

+ 26

- 0

examples/mediapipe/realsense-dev.yml

| @@ -0,0 +1,26 @@ | |||

| nodes: | |||

| - id: camera | |||

| build: pip install -e ../../node-hub/dora-pyrealsense | |||

| path: dora-pyrealsense | |||

| inputs: | |||

| tick: dora/timer/millis/100 | |||